Motivation

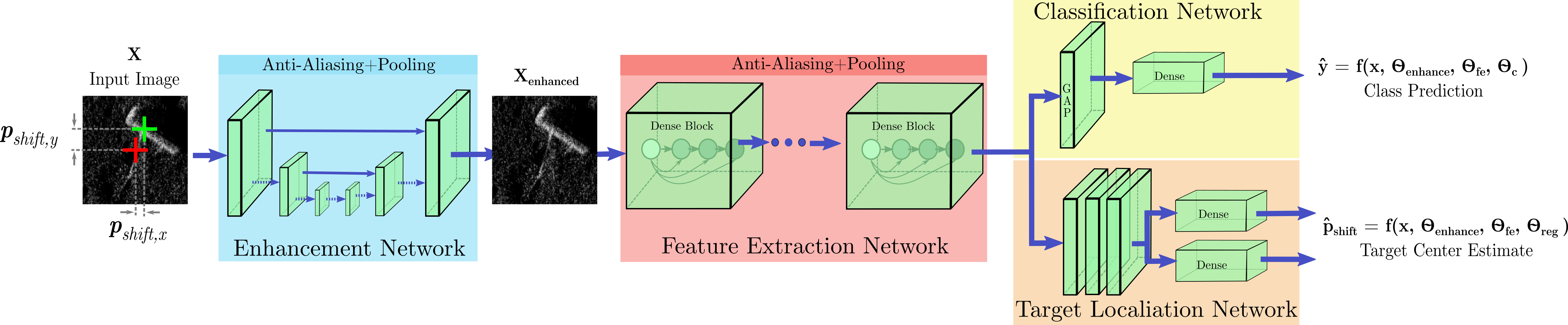

Image enhancement of synthetic aperture sonar (SAS) images through despeckling is often used to improve image interpretability for humans. We ask: is there an image enhancement function that improves classification? We answer in the affirmative (results in the table below). To this end, we incorporate a data-adaptive image enhancement network, trained with a self-supervised, domain-specific loss, into an existing classification network to improve classification performance. Our image enhancement function is learned from the data, removing the onerous task of selecting a fixed despeckling algorithm. Furthermore, ground-truth noisy/denoised image pairs, as required by previous methods, are not needed.

Most SAS classifiers only determine the presence of a target object and are agnostic to where in the image it appears. We therefore add a target localization network alongside the classification network, again with the aim of improving classification performance. Like the image enhancement network, it is trained using a self-supervised, domain-specific loss. Our classifier now learns not only the target class but also the target position, thus acquiring scene context. The target localization network is trained using the domain knowledge that objects are centered when passed to the classifier. We use the common data augmentation technique of image translation through cropping to induce new target positions during training, and have the target localization network estimate the induced target position in addition to the primary task of classification. This encourages the model to learn the "where" of the scene in addition to the "what".
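The cropping-based augmentation described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `crop_at` and `random_crop`, the normalization by half the crop size, and the tile and crop dimensions are all assumptions made for the example. The only fact taken from the text is that the target is assumed to sit at the center of the uncropped tile, so the crop offset alone determines the induced target position.

```python
import numpy as np

def crop_at(tile, top, left, crop_size):
    """Crop a square window and return it with the induced target offset.

    Assumes (domain prior) that the target lies at the center of `tile`.
    The offset is (row, col) of the target relative to the crop center,
    divided by half the crop size (a hypothetical normalization).
    """
    h, w = tile.shape[:2]
    crop = tile[top:top + crop_size, left:left + crop_size]
    # Target position in tile coordinates: the tile center.
    target_row, target_col = (h - 1) / 2.0, (w - 1) / 2.0
    # Crop center in tile coordinates.
    center_row = top + (crop_size - 1) / 2.0
    center_col = left + (crop_size - 1) / 2.0
    half = crop_size / 2.0
    offset = np.array([(target_row - center_row) / half,
                       (target_col - center_col) / half])
    return crop, offset

def random_crop(tile, crop_size, rng):
    """Pick a random crop location and return (crop, position label)."""
    h, w = tile.shape[:2]
    top = int(rng.integers(0, h - crop_size + 1))   # upper bound is exclusive
    left = int(rng.integers(0, w - crop_size + 1))
    return crop_at(tile, top, left, crop_size)

# Usage: the offset serves as a free regression label for the localization
# branch; no manual annotation is involved.
rng = np.random.default_rng(0)
tile = np.arange(64, dtype=float).reshape(8, 8)
crop, position_label = random_crop(tile, 6, rng)
```

Because the label is derived from the crop coordinates rather than from annotation, the localization task adds no labeling cost to an existing dataset.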

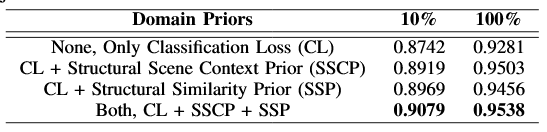

We train the two aforementioned networks and the classification network simultaneously by adding regularization terms to the primary classification loss. Because these terms are self-supervised, the formulation requires no extra data or labels, making it suitable as a drop-in replacement when training against existing datasets. The table below shows through ablation that each domain-specific loss improves classification performance and that the best classification performance is achieved when they are combined.
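In general form, adding regularization terms to a classification loss can be written as below. This is only a schematic: the symbols $\mathcal{L}_{\text{enh}}$ and $\mathcal{L}_{\text{loc}}$ and the weights $\lambda_{\text{enh}}, \lambda_{\text{loc}}$ are placeholder names chosen for this sketch, not the notation of the paper. The exact terms used for training are the ones given in the Loss Function section.

```latex
\mathcal{L}_{\text{total}}
  = \mathcal{L}_{\text{cls}}
  + \lambda_{\text{enh}} \, \mathcal{L}_{\text{enh}}
  + \lambda_{\text{loc}} \, \mathcal{L}_{\text{loc}},
\qquad \lambda_{\text{enh}}, \lambda_{\text{loc}} \ge 0
```

Setting either weight to zero removes the corresponding prior, which is the kind of comparison an ablation study makes.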

Our network ingests a SAS image tile, enhances it using a data-adaptive, jointly learned transform, and outputs the object class and the object position in the image tile.

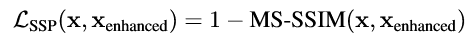

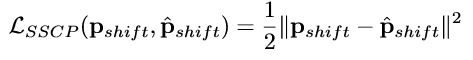

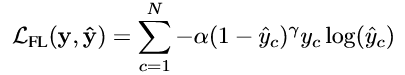

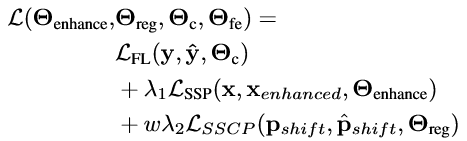

Loss Function

The final loss function used to train our method, Structural Prior Driven Regularized Deep Learning (SPDRDL).

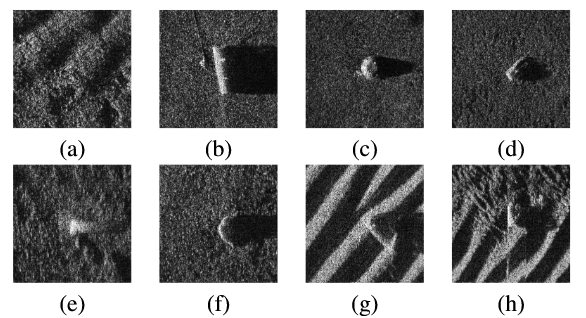

Image Samples

Synthetic aperture sonar (SAS) is capable of producing high-quality, high-resolution imagery of the seafloor and objects on it. The images here are examples collected from the Centre for Maritime Research and Experimentation MUSCLE system. In this work, we provide an automatic target recognition (ATR) algorithm to classify MUSCLE images into four classes. Example objects from each class are shown: (a) background, (b) cylinder, (c) truncated cone, and (d) wedge. Classification is difficult for two reasons: some objects appear target-like (e, f) but are not targets, producing false alarms; and some targets are difficult to discern (g, h) because of orientation, burial depth, or background topography, producing missed detections.

Experimental Results

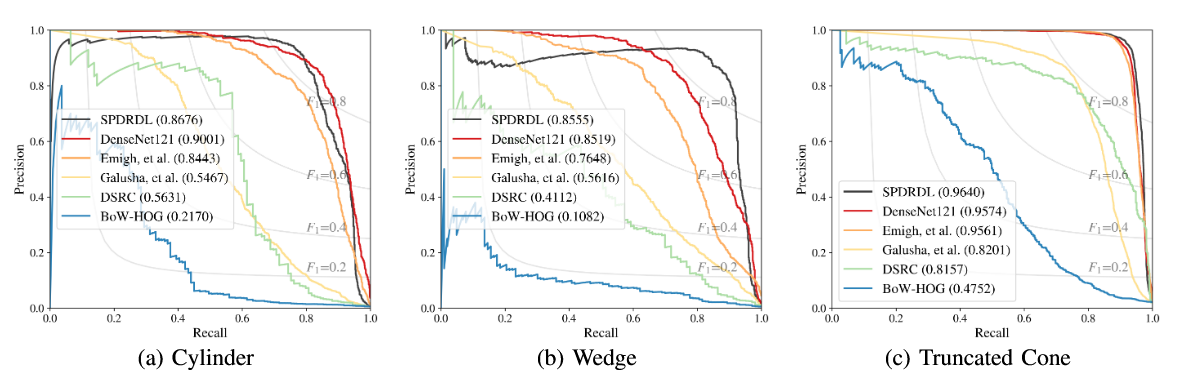

The figure above shows precision-recall curves for the three object classes in a one-vs-all scenario. The area under the precision-recall curve (AUCPR) is given in parentheses; higher numbers are better.
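For readers unfamiliar with the metric, a one-vs-all AUCPR can be estimated as sketched below. The page does not state which estimator was used for the reported numbers; this sketch uses the step-wise (average precision) estimate, one common choice, and ignores tied scores for simplicity. The function names and the toy data are hypothetical.

```python
import numpy as np

def average_precision(scores, is_positive):
    """Step-wise estimate of the area under the precision-recall curve."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    n_pos = int(is_positive.sum())
    if n_pos == 0:
        return float("nan")  # undefined without positive samples
    # Rank samples from most to least confident.
    order = np.argsort(-scores, kind="stable")
    hits = is_positive[order]
    # Precision after each ranked sample.
    precision = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    # Average the precision at the ranks where a positive is retrieved.
    return float(precision[hits].sum() / n_pos)

def one_vs_all_aucpr(probs, labels, class_index):
    """Treat `class_index` as positive and every other class as negative."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    return average_precision(probs[:, class_index], labels == class_index)

# Toy usage: 4 samples, 2 classes; class 1 is the class of interest.
probs = np.array([[0.1, 0.9], [0.2, 0.8], [0.3, 0.7], [0.4, 0.6]])
labels = np.array([1, 0, 1, 0])
aucpr = one_vs_all_aucpr(probs, labels, class_index=1)
```

In the toy example the positives are retrieved at ranks 1 and 3, with precision 1 and 2/3 respectively, so the estimate is their mean, about 0.833.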

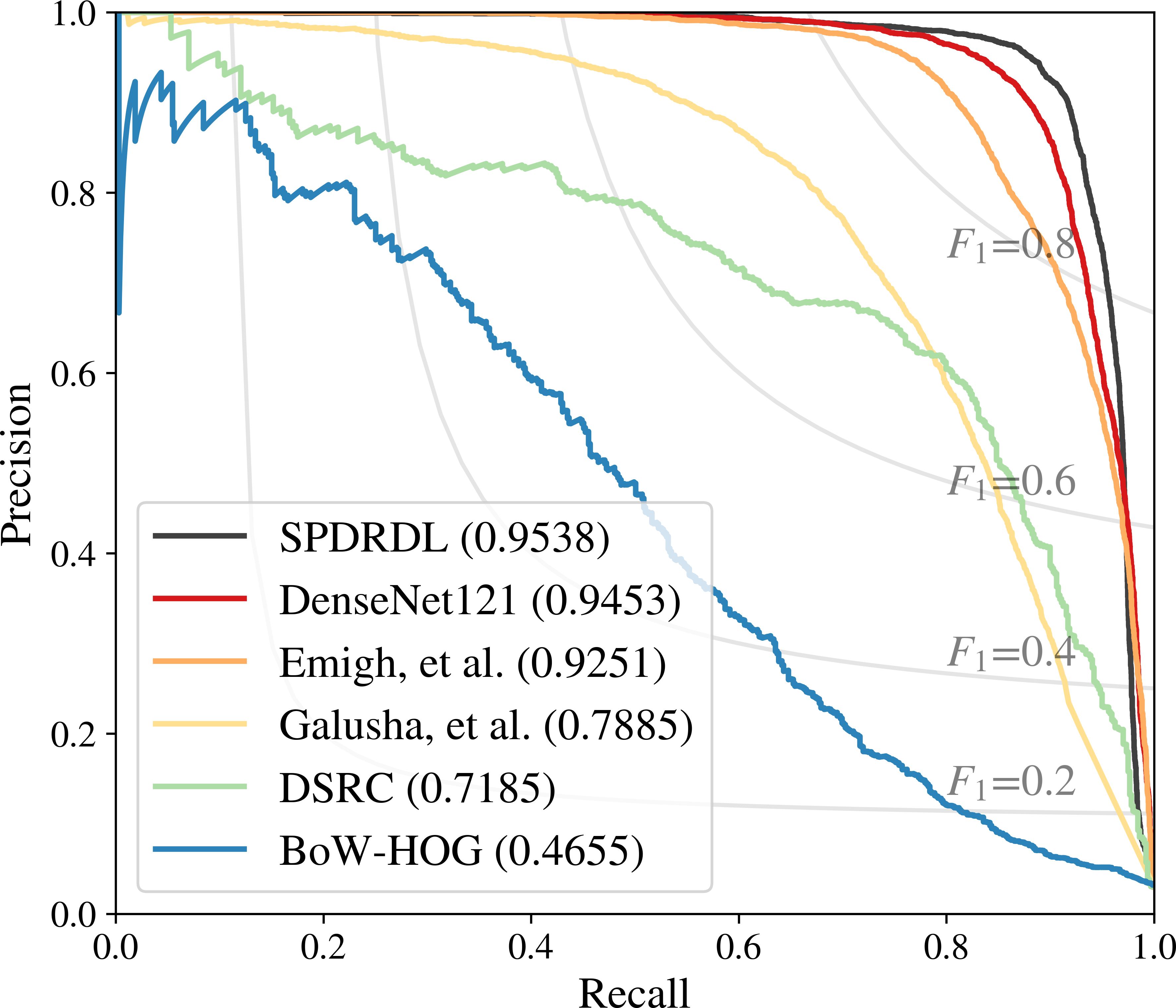

(left) We examined precision-recall curves for the one-vs-all scenario of target class vs. background. (right) We also examined AUCPR as a function of range. In both plots, higher numbers indicate better performance.

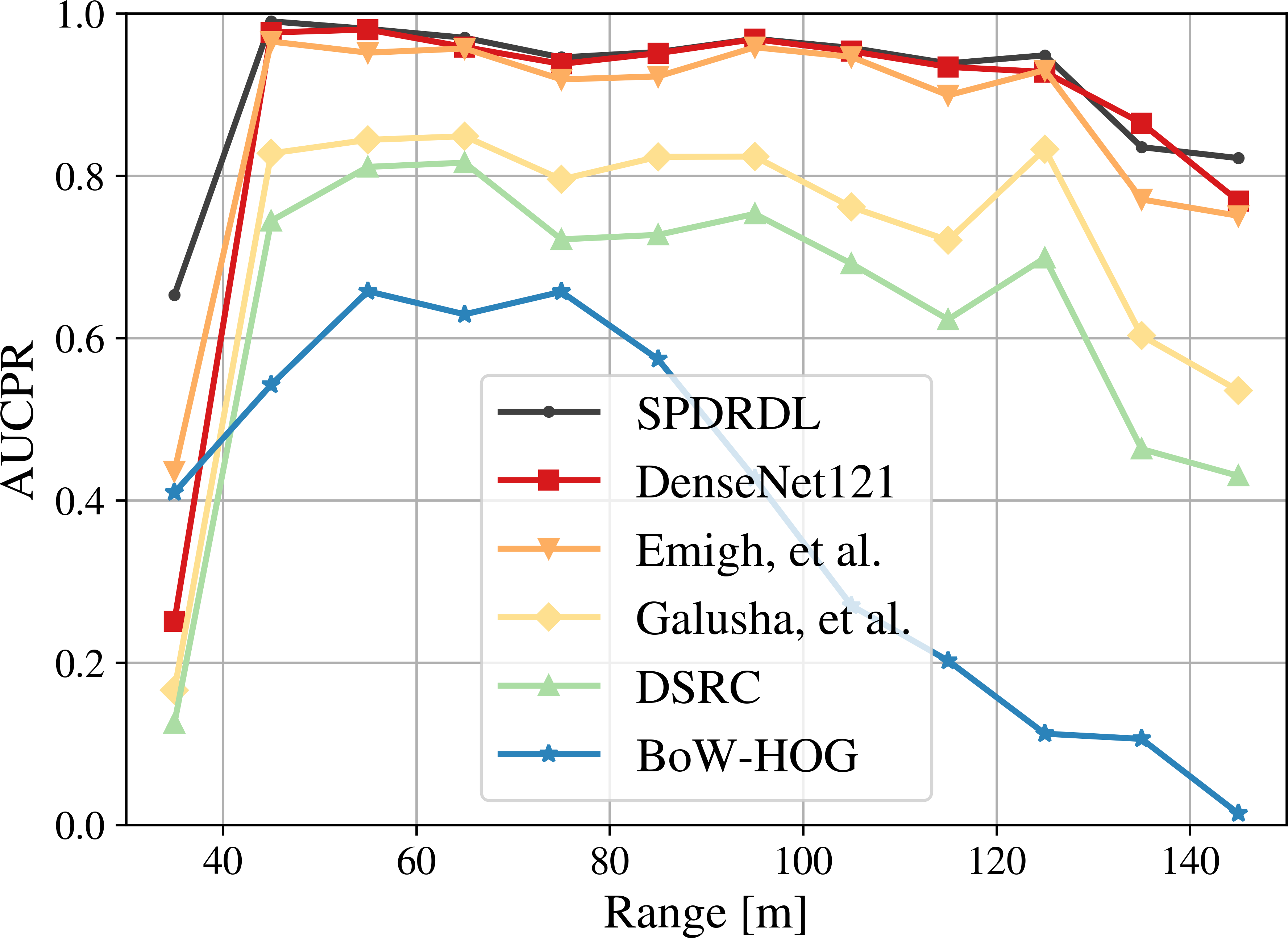

We examined AUCPR in a limited-training-data scenario in which only 10% of the training data is available. We find that our method degrades gracefully while maintaining the best performance of all the evaluated methods.

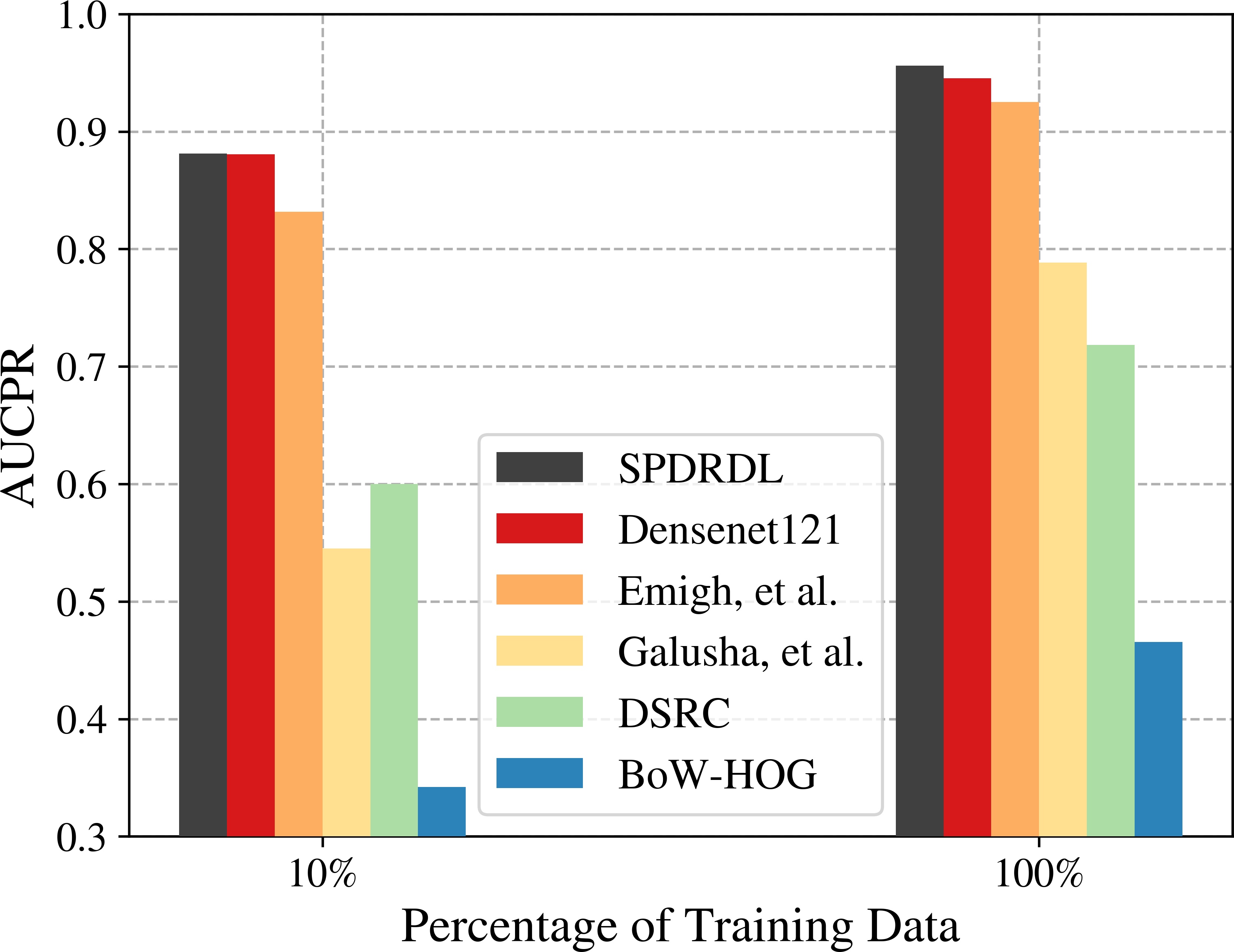

We performed an ablation study to understand the performance benefit of each domain prior. This table reports AUCPR for the low (10%) and high (100%) training data scenarios; higher numbers indicate better performance.

Related Publications

I. D. Gerg and V. Monga, "Structural Prior Driven Regularized Deep Learning for Sonar Image Classification," To appear in IEEE Transactions on Geoscience and Remote Sensing. [arxiv]

I. D. Gerg, D. Williams, and V. Monga, "Data Adaptive Image Enhancement and Classification for Synthetic Aperture Sonar," IEEE IGARSS 2020.

Selected References

G. Huang, Z. Liu et al., “Densely connected convolutional networks,” in Computer Vision and Pattern Recognition. IEEE, 2017, pp. 4700–4708. [IEEE Xplore]

M. Emigh, B. Marchand et al., “Supervised deep learning classification for multi-band synthetic aperture sonar,” in Synthetic Aperture Sonar & Synthetic Aperture Radar Conference, vol. 40. Institute of Acoustics, 2018, pp. 140–147.

A. Galusha, J. Dale et al., “Deep convolutional neural network target classification for underwater synthetic aperture sonar imagery,” in Detection and Sensing of Mines, Explosive Objects, and Obscured Targets XXIV, vol. 11012. International Society for Optics and Photonics, 2019, p. 1101205. [SPIE Digital Library]

J. McKay, V. Monga, and R. G. Raj, “Robust sonar ATR through Bayesian pose-corrected sparse classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 10, pp. 5563–5576, 2017. [IEEE Xplore]

J. C. Isaacs, “Sonar automatic target recognition for underwater UXO remediation,” in Computer Vision and Pattern Recognition Workshops. IEEE, 2015. [IEEE Xplore]