Source Code

Source code for SDL, together with the training and test data, can be found on GitHub.

Proposed Model

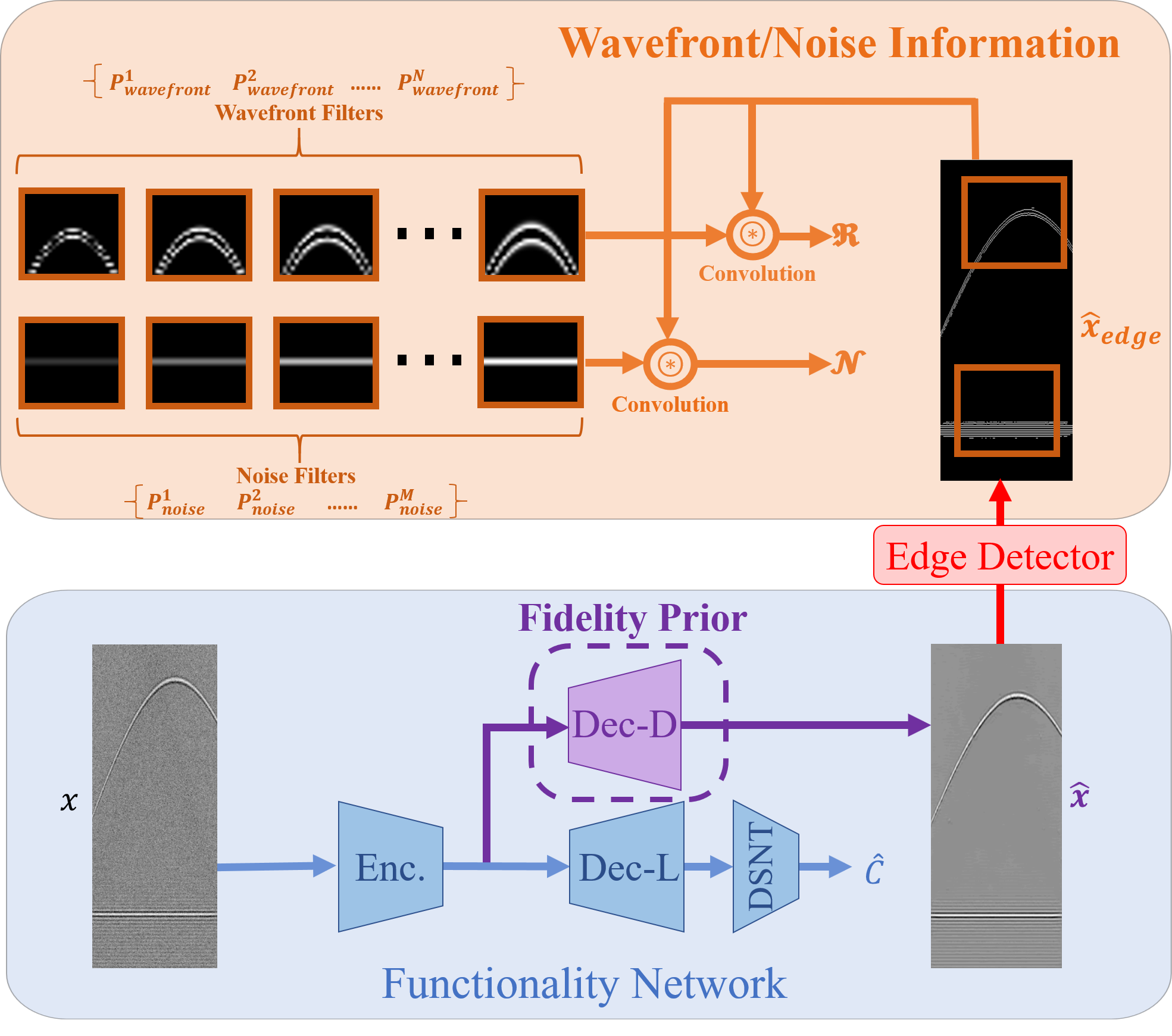

Figure. The simultaneous denoising and localization (SDL) network.

Data Sets

Four different photoacoustic datasets are discussed in this project:

Allman et al.'s dataset (ref. 1).

Johnstonbaugh et al.'s dataset (ref. 2).

Our new, practically representative simulated dataset.

An experimentally captured dataset.

Simulated Dataset Generation

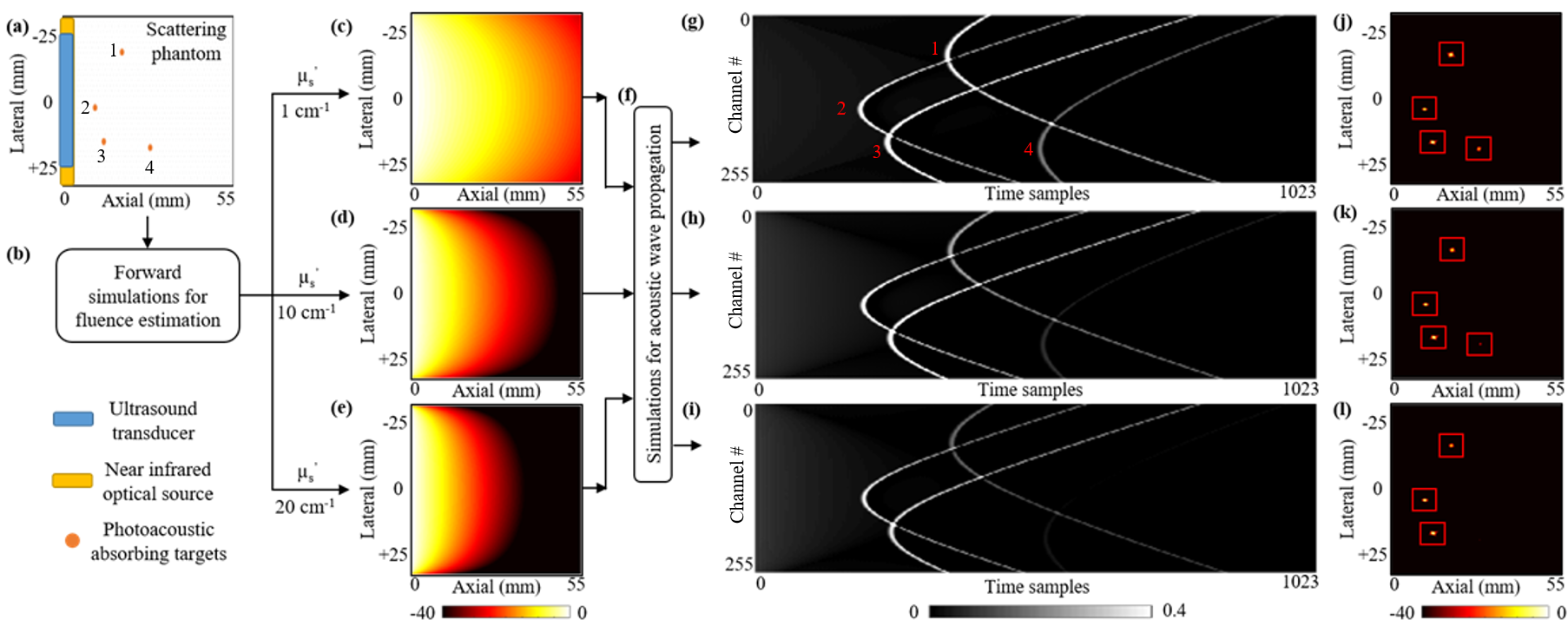

Figure. Details of generating samples with a random number of targets and different scattering levels, and their corresponding beamformed images.
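To make the "sample → beamformed image" step more concrete, here is a minimal pure-Python sketch. It is not the authors' data-generation pipeline. It places a random number of point targets in front of a linear array, adds weak random point sources as a crude stand-in for scattering, forms channel data from one-way time-of-flight delays, and beamforms it with delay-and-sum (DAS). Every constant is an illustrative assumption: the array geometry, the sampling rate, the 1540 m/s speed of sound, and the unipolar Gaussian pulse. Real photoacoustic point-source signals are bipolar. The names `scatter_level` and `n_scatterers` are likewise hypothetical.

```python
import math
import random

# All constants below are illustrative assumptions, not values from the SDL paper.
SOUND_SPEED = 1540.0  # m/s, a typical soft-tissue speed of sound
FS = 40e6             # channel sampling rate, Hz
PITCH = 0.3e-3        # element spacing of the linear array, m
N_ELEMENTS = 32
N_SAMPLES = 600       # samples per channel (enough for ~15 mm depth here)
PULSE_STD = 2.0       # std of the (simplified, unipolar) Gaussian pulse, in samples


def element_positions():
    """x-coordinates (m) of a linear array centred at x = 0 on the z = 0 line."""
    return [(i - (N_ELEMENTS - 1) / 2) * PITCH for i in range(N_ELEMENTS)]


def random_scene(rng, max_targets=3, scatter_level=0.1, n_scatterers=20):
    """Draw 1..max_targets unit-amplitude targets plus weak random 'scatterers'.

    The clutter model (weak point sources whose amplitude is scaled by the
    hypothetical scatter_level parameter) is only a crude stand-in for scattering.
    """
    targets = [(rng.uniform(-4e-3, 4e-3), rng.uniform(5e-3, 15e-3), 1.0)
               for _ in range(rng.randint(1, max_targets))]
    clutter = [(rng.uniform(-4e-3, 4e-3), rng.uniform(2e-3, 15e-3),
                scatter_level * rng.uniform(0.5, 1.0))
               for _ in range(n_scatterers)]
    return targets, clutter


def simulate_channels(sources):
    """Sum one-way-delayed Gaussian pulses from each (x, z, amp) source on each element."""
    data = [[0.0] * N_SAMPLES for _ in range(N_ELEMENTS)]
    half = int(4 * PULSE_STD)  # evaluate each pulse only within +/- 4 std
    for e, xe in enumerate(element_positions()):
        for x, z, amp in sources:
            t0 = math.hypot(x - xe, z) / SOUND_SPEED * FS  # arrival time in samples
            centre = round(t0)
            for n in range(max(0, centre - half), min(N_SAMPLES, centre + half + 1)):
                data[e][n] += amp * math.exp(-0.5 * ((n - t0) / PULSE_STD) ** 2)
    return data


def delay_and_sum(data, xs, zs):
    """DAS image on the xs-by-zs grid: each pixel sums every channel at its one-way delay."""
    elems = element_positions()
    image = []
    for z in zs:
        row = []
        for x in xs:
            acc = 0.0
            for e, xe in enumerate(elems):
                n = round(math.hypot(x - xe, z) / SOUND_SPEED * FS)
                if 0 <= n < N_SAMPLES:
                    acc += data[e][n]
            row.append(acc)
        image.append(row)
    return image


if __name__ == "__main__":
    rng = random.Random(0)
    targets, clutter = random_scene(rng, max_targets=3, scatter_level=0.2)
    channels = simulate_channels(targets + clutter)
    xs = [i * 0.25e-3 for i in range(-16, 17)]  # -4 mm .. 4 mm
    zs = [i * 0.25e-3 for i in range(20, 61)]   # 5 mm .. 15 mm
    image = delay_and_sum(channels, xs, zs)
    peak, iz, ix = max((v, iz, ix) for iz, row in enumerate(image)
                       for ix, v in enumerate(row))
    print("targets:", targets)
    print("brightest pixel (x, z):", xs[ix], zs[iz])
```

Raising `scatter_level` makes the clutter arcs in the DAS image stronger relative to the true targets. This loosely mirrors why harder samples call for a learned denoising stage before localization.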

Selected Results

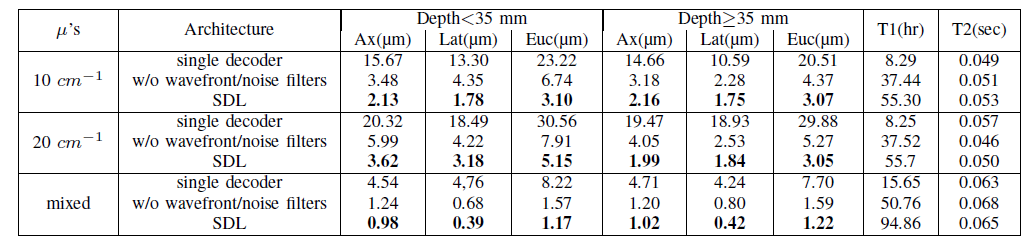

Table. Ablation study results for SDL and its variants on our simulated dataset.

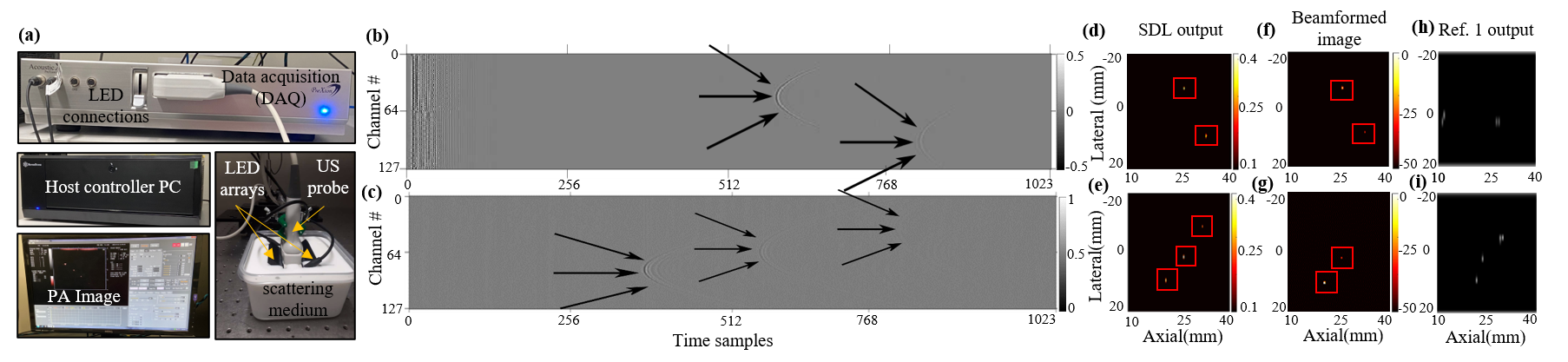

Figure. Performance comparison of SDL, beamforming, and the method of ref. 1.

Related Publications

Amirsaeed Yazdani, S. Agrawal, K. Johnstonbaugh, R. Kothapalli, and V. Monga, "Simultaneous Denoising and Localization Network for Photoacoustic Target Localization," to appear in IEEE Transactions on Medical Imaging, 2021. [arXiv] [early access]

Selected References

D. Allman et al., "Photoacoustic source detection and reflection artifact removal enabled by deep learning," IEEE Transactions on Medical Imaging, vol. 37, no. 6, pp. 1464–1477, 2018.

K. Johnstonbaugh et al., "A deep learning approach to photoacoustic wavefront localization in deep-tissue medium," IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, pp. 1–1, 2020.

N. Awasthi et al., "Deep neural network-based sinogram super-resolution and bandwidth enhancement for limited-data photoacoustic tomography," IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, vol. 67, no. 12, pp. 2660–2673, 2020.

All rights reserved © iPAL 2018