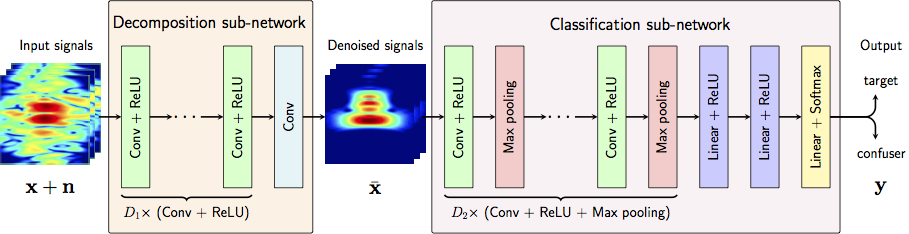

Network Structure

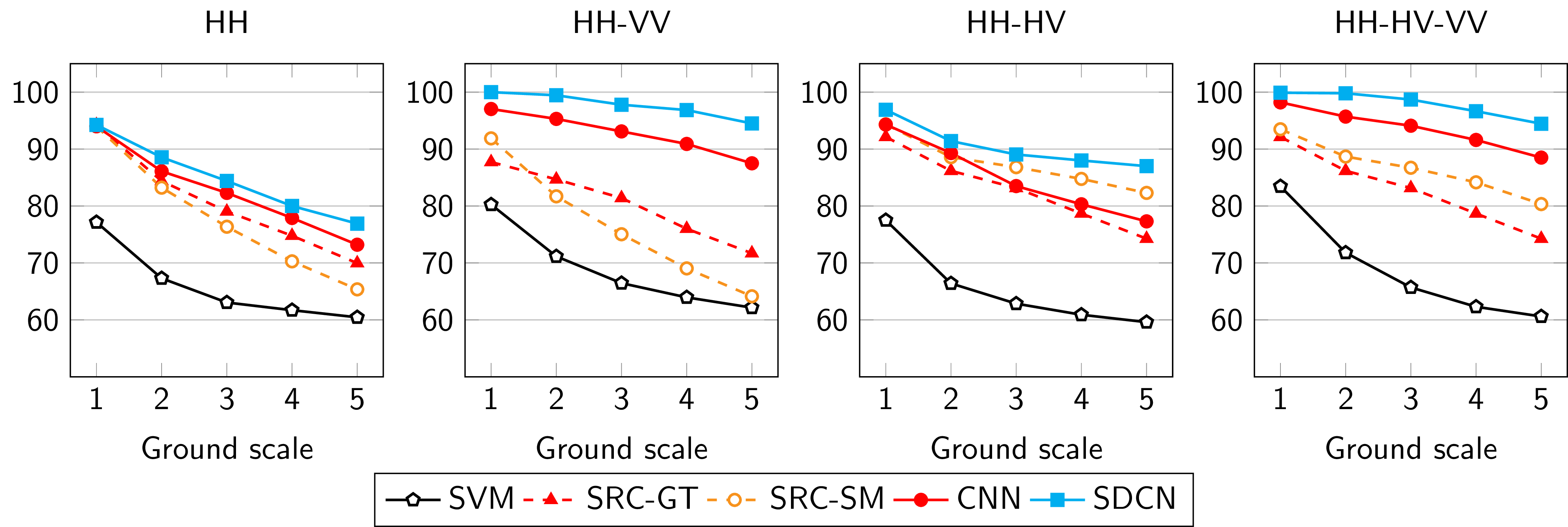

Evaluations

Classification accuracy (%) as a function of noise level and polarization combination.

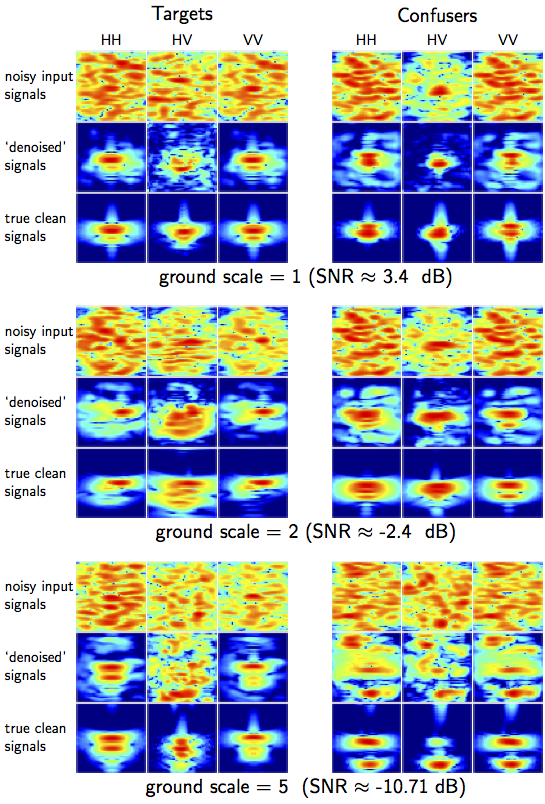

SR Results

Visualization of signals after training SDCN. Left column: targets; right column: confusers. Row 1: ground scale = 1 (easy); row 2: ground scale = 2 (less easy); row 3: ground scale = 5 (very difficult). In each 3x3 block, the top row shows the noisy input signals, the middle row the denoised signals, and the bottom row the ground truth. Within a block, the left column is horizontal transmitter, horizontal receiver (HH); the middle column is horizontal transmitter, vertical receiver (HV); and the right column is vertical transmitter, vertical receiver (VV).

Related Publications

T. H. Vu, T. Guo, L. Nguyen, and V. Monga, "Deep Network for Simultaneous Decomposition and Classification in UWB-SAR Imagery," accepted to the IEEE Radar Conference, Oklahoma City, April 2018. [paper]

Selected References

K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang, “Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising,” IEEE Transactions on Image Processing, 2017. [paper]

L. H. Nguyen, M. Ressler, and J. Sichina, “Sensing through the wall imaging using the Army Research Lab ultra-wideband synchronous impulse reconstruction (UWB SIRE) radar,” Proceedings of SPIE, vol. 6947, no. 69470B, 2008.

C. Dong, C. C. Loy, K. He, and X. Tang, “Learning a deep convolutional network for image super-resolution,” in Computer Vision–ECCV 2014, pp. 184–199, Springer, 2014. [paper]

T. H. Vu, L. Nguyen, C. Le, and V. Monga, “Tensor sparsity for classifying low-frequency ultra-wideband (UWB) SAR imagery,” in 2017 IEEE Radar Conference (RadarConf), IEEE, 2017, pp. 0557–0562.

T. H. Vu, H. S. Mousavi, V. Monga, U. Rao, and G. Rao, “Histopathological image classification using discriminative feature-oriented dictionary learning,” IEEE Transactions on Medical Imaging, vol. 35, no. 3, pp. 738–751, March 2016.

T. H. Vu and V. Monga, “Learning a low-rank shared dictionary for object classification,” accepted to International Conference on Image Processing, 2016.

T. Vu and V. Monga, “Fast low-rank shared dictionary learning for image classification,” arXiv preprint arXiv:1610.08606, 2016. [paper]