DICTOL - Dictionary learning toolbox

An implementation of LRSDL and other well-known dictionary learning methods can be found in [this GitHub repository].

A separate version of this toolbox is also available in [this GitHub repository].

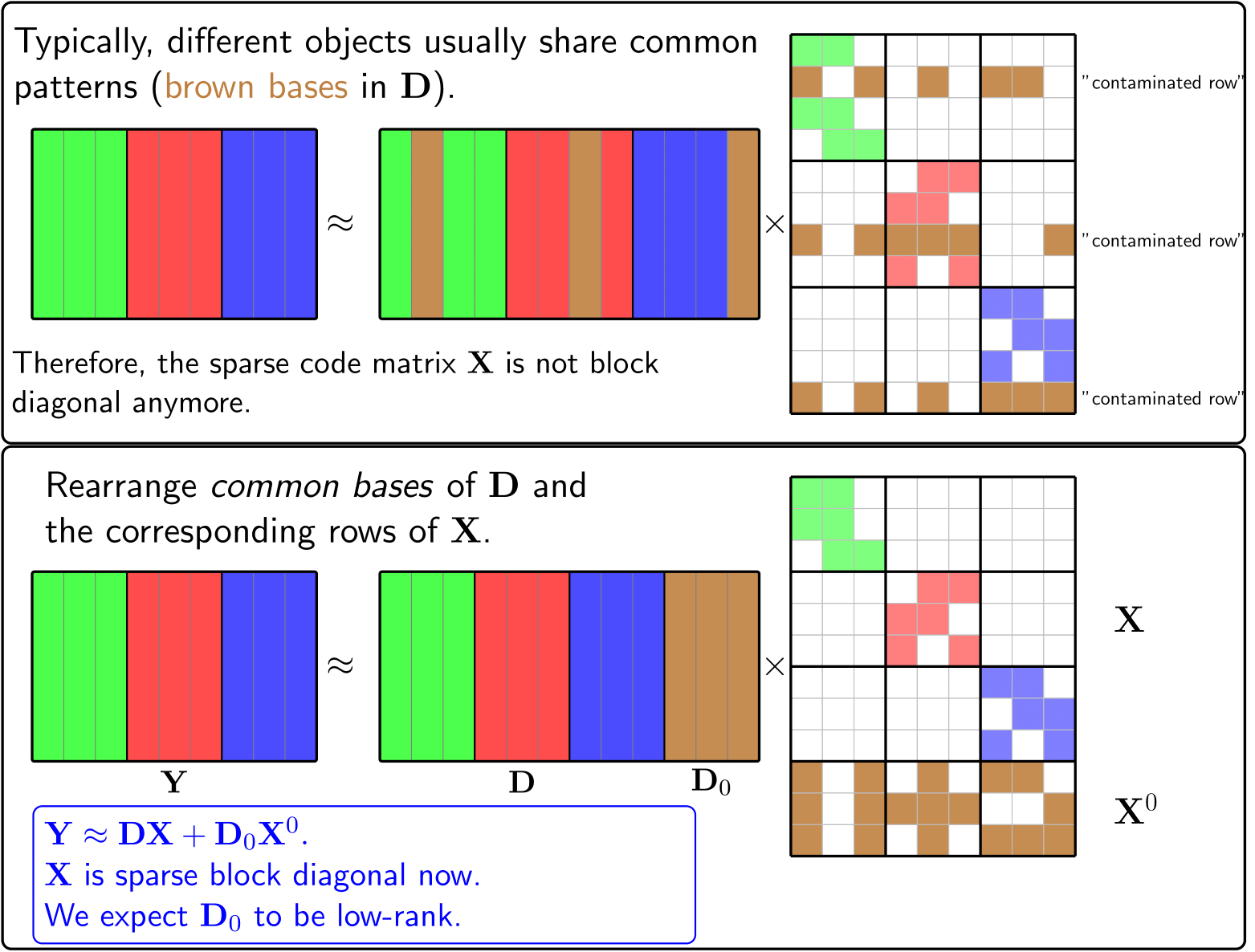

Motivation

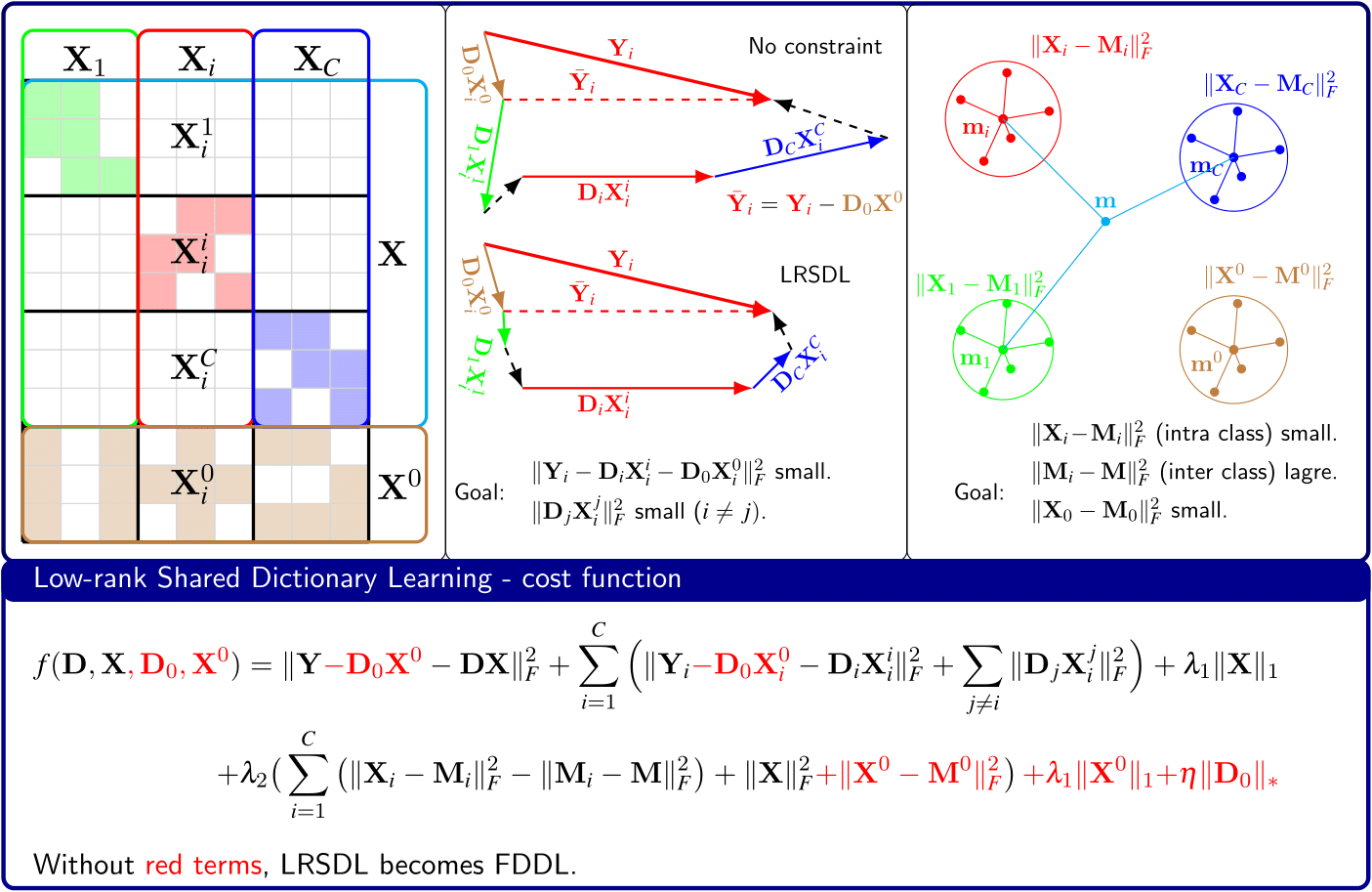

Idea Visualization & Cost Function

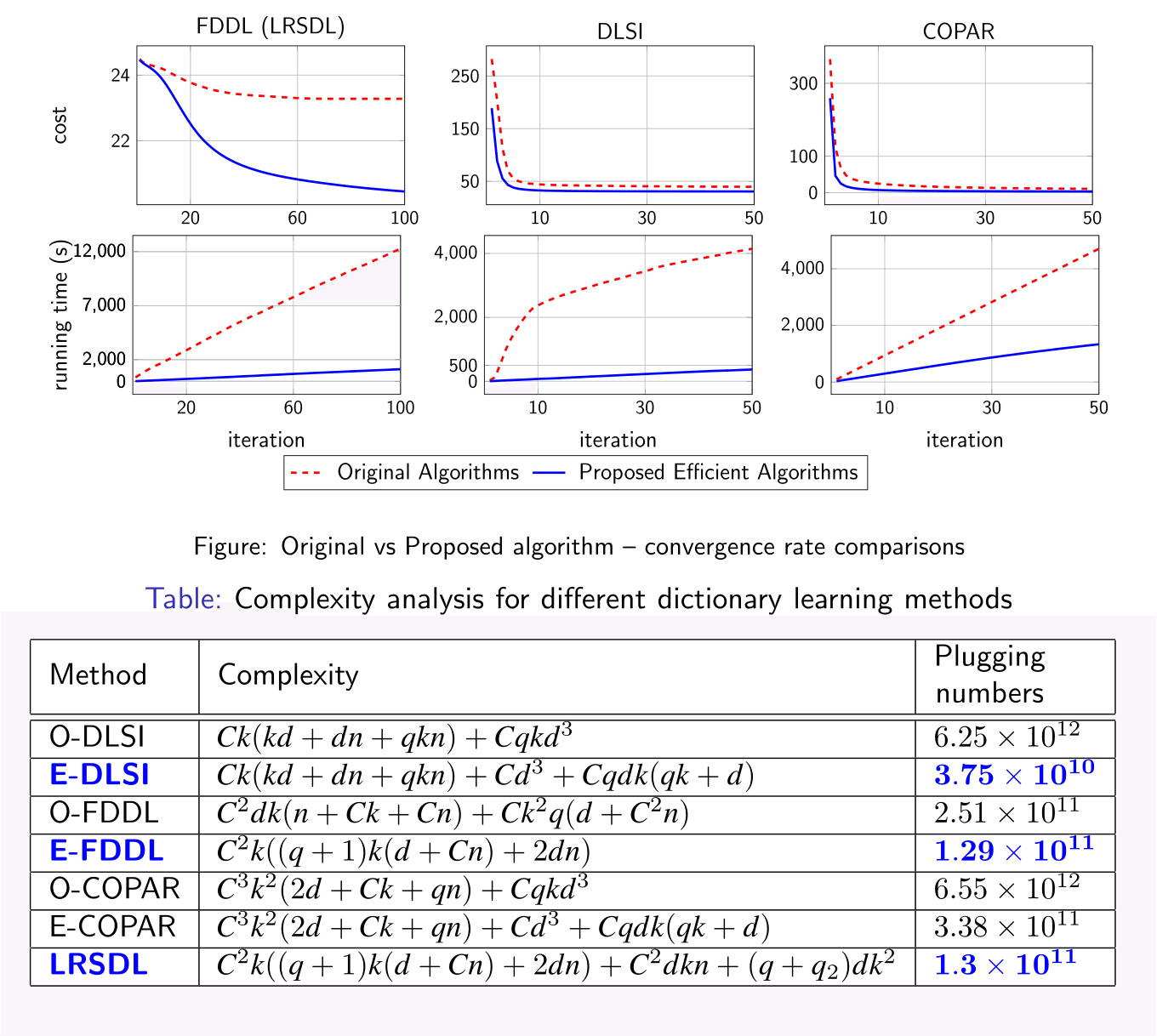

Convergence Rate & Computational Complexity

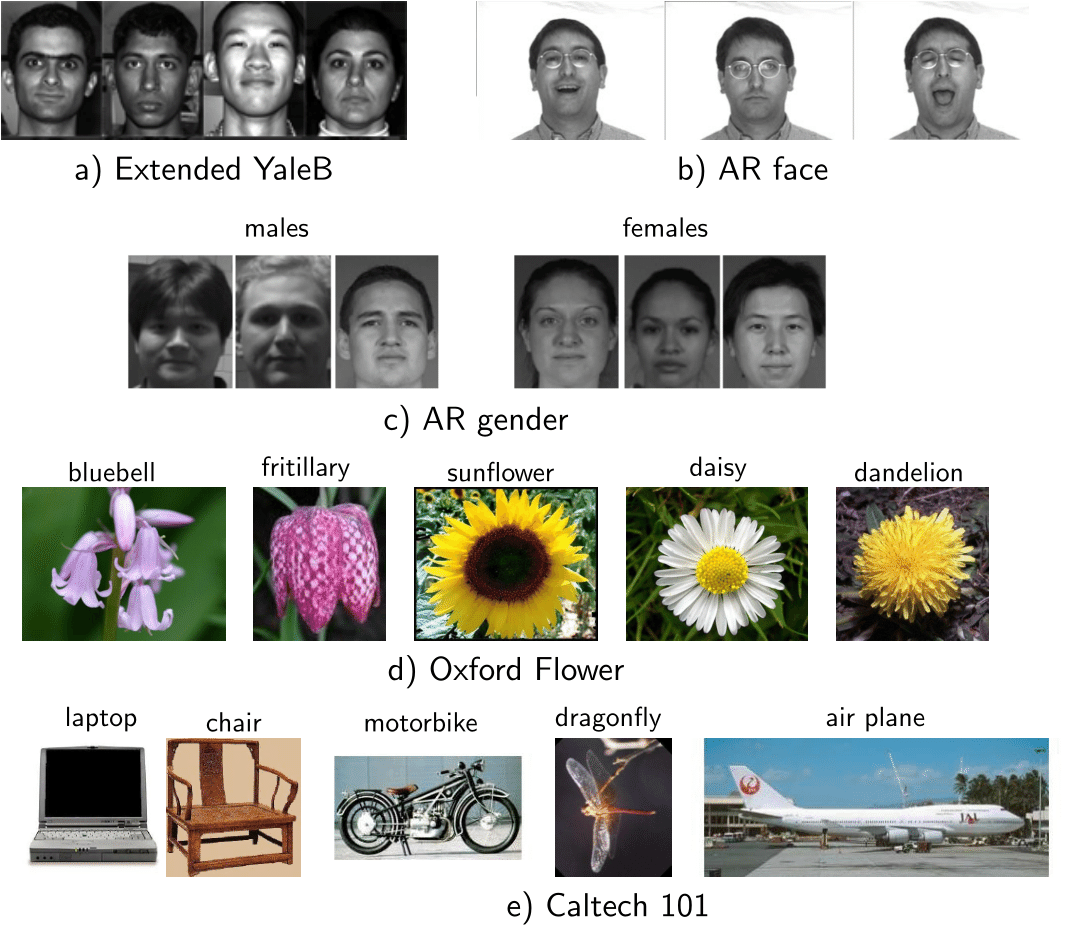

Samples from Widely-used Datasets

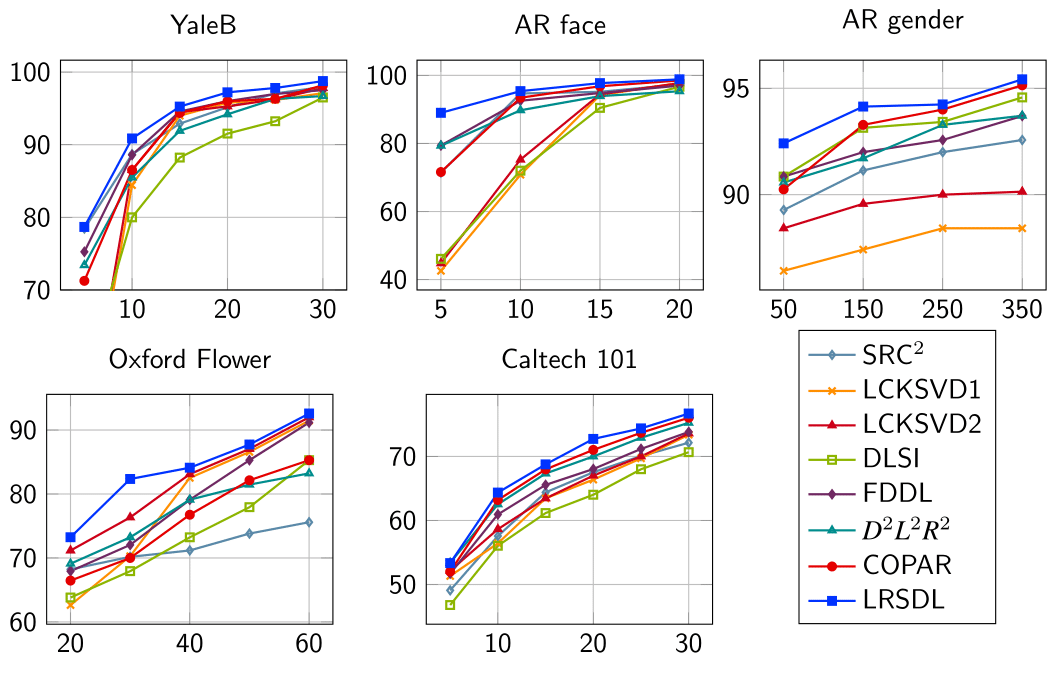

Classification Results

Related Publications

Tiep H. Vu, Vishal Monga. "Fast Low-rank Shared Dictionary Learning for Image Classification." IEEE Transactions on Image Processing, volume 26, issue 11, pages 5160-5175, November 2017. [paper].

Tiep H. Vu, Vishal Monga. "Learning a low-rank shared dictionary for object classification." IEEE International Conference on Image Processing (ICIP), pp. 4428-4432, 2016. [paper], [ICIP poster].

Selected References

Wright, John, et al. "Robust face recognition via sparse representation." IEEE Transactions on Pattern Analysis and Machine Intelligence 31.2 (2009): 210-227. [paper].

Mairal, Julien, et al. "Online learning for matrix factorization and sparse coding." The Journal of Machine Learning Research 11 (2010): 19-60. [paper].

Jiang, Zhuolin, Zhe Lin, and Larry S. Davis. "Label consistent K-SVD: Learning a discriminative dictionary for recognition." IEEE Transactions on Pattern Analysis and Machine Intelligence, 35.11 (2013): 2651-2664. [project page].

Yang, Meng, et al. "Fisher discrimination dictionary learning for sparse representation." IEEE International Conference on Computer Vision (ICCV), 2011. [paper].

Ramirez, Ignacio, Pablo Sprechmann, and Guillermo Sapiro. "Classification and clustering via dictionary learning with structured incoherence and shared features." IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2010. [paper].

Kong, Shu, and Donghui Wang. "A dictionary learning approach for classification: separating the particularity and the commonality." European Conference on Computer Vision. Springer Berlin Heidelberg, 2012. 186-199. [paper].

Cai, Jian-Feng, Emmanuel J. Candès, and Zuowei Shen. "A singular value thresholding algorithm for matrix completion." SIAM Journal on Optimization 20.4 (2010): 1956-1982. [paper].

Beck, Amir, and Marc Teboulle. "A fast iterative shrinkage-thresholding algorithm for linear inverse problems." SIAM Journal on Imaging Sciences 2.1 (2009): 183-202. [paper].

The Sparse Modeling Software [project page].