Motivation

Synthetic aperture sonar (SAS) is a coherent imaging modality capable of producing high-resolution, constant-resolution images of the seafloor. Segmenting these images plays an important role in analyzing the seabed environment. One challenge in SAS seabed segmentation is acquiring labeled data: obtaining pixel-level labels is time-consuming and often requires a diver survey to obtain correct results. This scarcity of labeled data makes it difficult to deploy convolutional neural networks (CNNs) for SAS seabed segmentation, as such methods usually require large amounts of labeled data to work well.

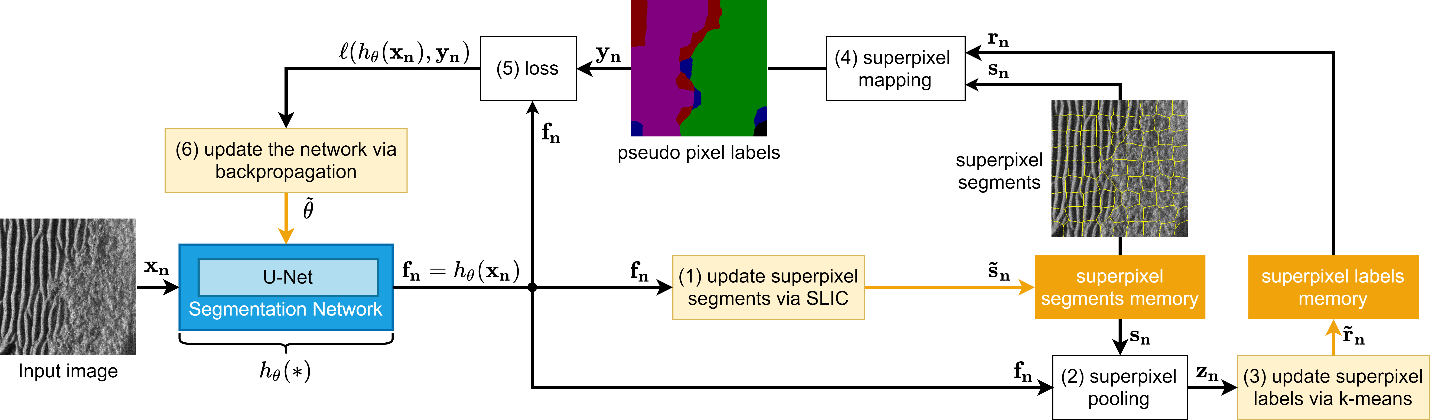

In this work, we propose a CNN-based technique that does not need to train on large datasets of pixel-level labeled data to succeed. Specifically, we note the correlation between the class boundaries of superpixels and human-annotated segmentations of SAS images. We devise a novel scheme that combines classical superpixel segmentation algorithms (which are non-differentiable) with deep learning (which is differentiable) to obtain a deep learning algorithm that uses superpixel information from unlabeled pixels to compensate for the lack of abundant training data.

We evaluate our method, which we call iterative deep unsupervised segmentation (IDUS), against several comparison methods on a contemporary real-world SAS dataset. We show the compelling performance of IDUS against existing state-of-the-art methods for SAS image co-segmentation. Additionally, we present an extension of our method that obtains even better results when semi-labeled data is available.

The above figure shows an iteration of the simplest version of IDUS. We store the superpixel segments and labels in the superpixel segments memory and superpixel labels memory, respectively. IDUS alternates between training a deep segmentation network and generating new pseudo-labels.
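The pseudo-label generation step can be illustrated with a small sketch. It assumes, purely for illustration, that new pseudo-labels are formed by a majority vote of the network's per-pixel predictions inside each superpixel; the exact rule used by IDUS may differ, and the function name `refine_with_superpixels` is hypothetical.

```python
from collections import Counter, defaultdict

def refine_with_superpixels(pred_labels, superpixel_ids):
    """Give every pixel the most common predicted label in its superpixel.

    pred_labels and superpixel_ids are 2-D lists of equal shape.
    Majority voting is an assumed rule, not necessarily the one IDUS uses.
    """
    votes = defaultdict(Counter)
    for pred_row, sp_row in zip(pred_labels, superpixel_ids):
        for label, sp in zip(pred_row, sp_row):
            votes[sp][label] += 1
    # Winning label per superpixel
    winner = {sp: counts.most_common(1)[0][0] for sp, counts in votes.items()}
    return [[winner[sp] for sp in sp_row] for sp_row in superpixel_ids]

# Toy 3x4 image: network predictions and a fixed superpixel map
pred = [[0, 0, 1, 1],
        [0, 1, 1, 1],
        [2, 2, 1, 2]]
superpixels = [[0, 0, 1, 1],
               [0, 0, 1, 1],
               [2, 2, 2, 2]]
pseudo = refine_with_superpixels(pred, superpixels)
```

In a full loop, `pseudo` would serve as the training target for the next round of network training, after which the network's new predictions would be refined again in the same way.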

Dataset

The dataset consists of 113 high-resolution complex-valued SAS images acquired from a high-frequency (HF) synthetic aperture sonar (SAS) system deployed on an unmanned underwater vehicle (UUV). The images were originally 1001×1001 pixels in size, which we downsample to 512×512 pixels for computational efficiency.

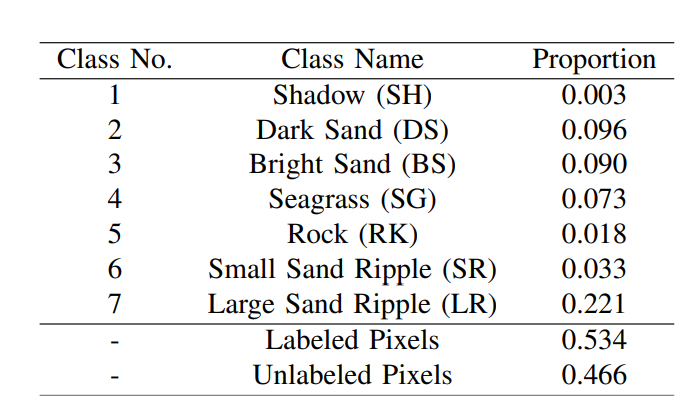

The above table shows the class names and corresponding sample proportions. The class distribution is highly imbalanced, especially for the Shadow class.

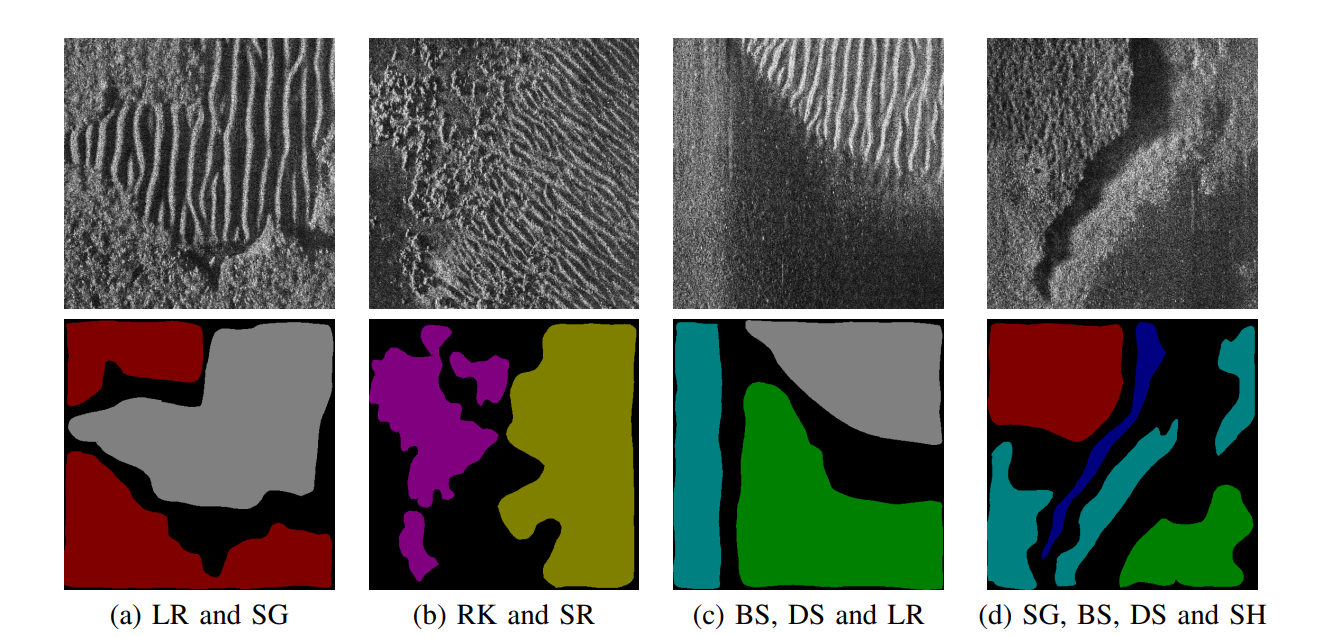

The above figure shows example SAS images with various textures: SH (blue), DS (green), BS (cyan), SG (red), RK (purple), SR (gold), LR (gray) and unlabeled pixels (black). Textures from left to right are: (a) large sand ripple and seagrass; (b) rock and small sand ripple; (c) bright sand, dark sand and large sand ripple; (d) seagrass, bright sand, dark sand and shadow.

Experimental Results

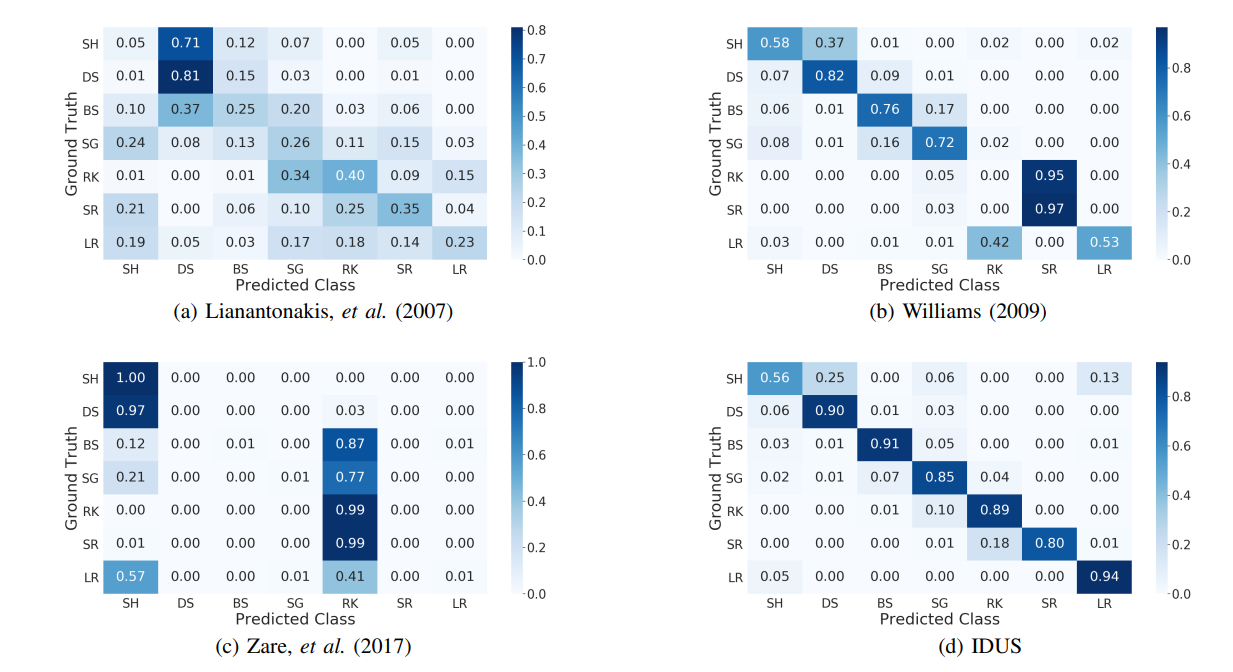

The above shows confusion matrices of IDUS along with comparisons against state-of-the-art unsupervised methods.

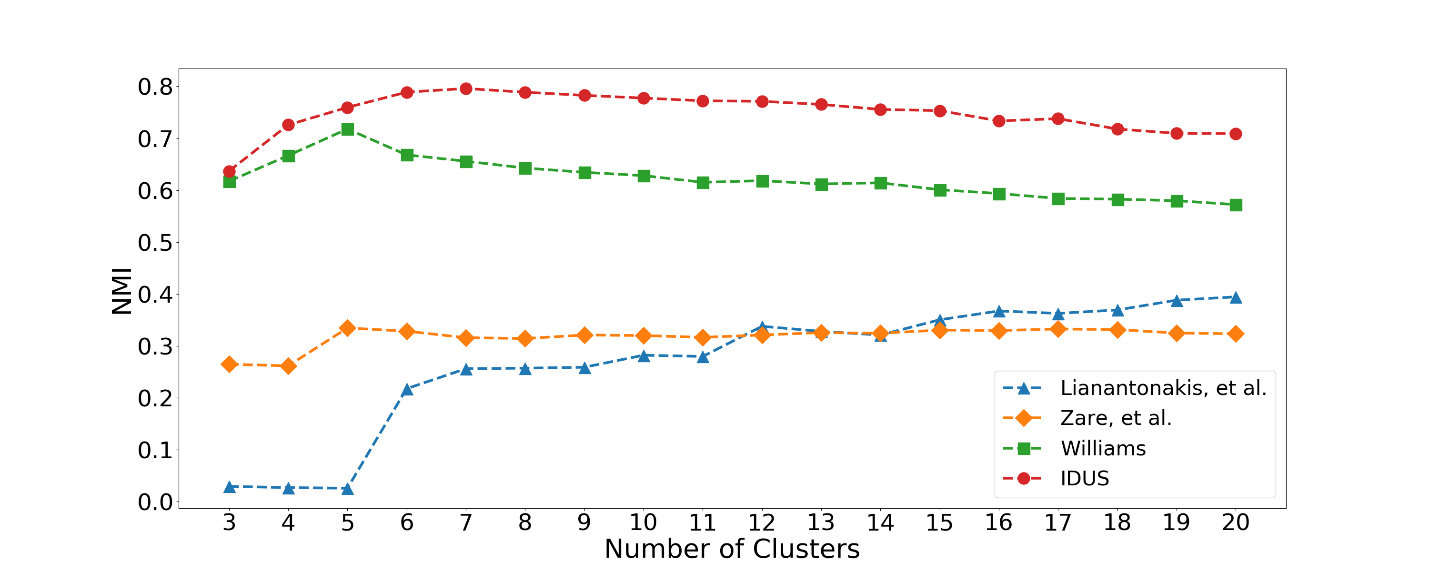

The above shows normalized mutual information (NMI) for various choices of cluster numbers. The results demonstrate that IDUS performs better than competing alternatives, independent of the number of clusters.
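For readers unfamiliar with the metric, the following is a minimal sketch of NMI between ground-truth labels and cluster assignments, both given as flat lists of per-pixel integers. It assumes normalization by the arithmetic mean of the two entropies; other normalizations exist, and the one used for the reported results is not stated here. Unlabeled pixels would need to be filtered out beforehand.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in nats) of a label sequence."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())

def nmi(truth, pred):
    """Normalized mutual information with arithmetic-mean normalization (assumed)."""
    n = len(truth)
    joint = Counter(zip(truth, pred))
    count_t, count_p = Counter(truth), Counter(pred)
    # I(T; P) = sum over pairs of p(t, p) * log(p(t, p) / (p(t) * p(p)))
    mi = sum((c / n) * math.log(c * n / (count_t[t] * count_p[p]))
             for (t, p), c in joint.items())
    denom = 0.5 * (entropy(truth) + entropy(pred))
    # Convention: two trivial single-cluster labelings count as identical
    return mi / denom if denom > 0 else 1.0

same_up_to_renaming = nmi([0, 0, 1, 1], [1, 1, 0, 0])
unrelated = nmi([0, 0, 1, 1], [0, 1, 0, 1])
```

Because NMI is invariant to how clusters are named, it can compare unsupervised methods without first matching cluster indices to class labels.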

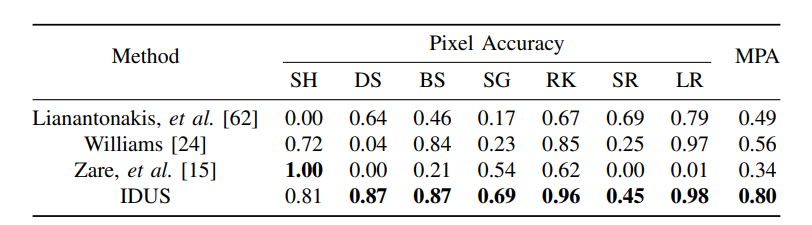

The above shows the pixel accuracies (PAs) of each class along with the corresponding mean pixel accuracy (MPA) for each method evaluated on the testing set (thirty-three sonar images for testing, eighty images for training). The best results for each class and the best MPA are in bold. IDUS has the highest PAs for most classes and the best MPA, demonstrating its superior performance over competing methods.
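As a rough illustration of these metrics, the sketch below computes per-class PA as the fraction of a class's labeled pixels that are predicted correctly, and MPA as the unweighted mean of the per-class PAs. These are common definitions and are assumed here; the `ignore_label` value of -1 for unlabeled pixels is a hypothetical convention, and predictions are assumed to be already mapped to class indices.

```python
def pixel_accuracies(truth, pred, num_classes, ignore_label=-1):
    """Return ({class: PA}, MPA) from flat lists of per-pixel class indices.

    Pixels whose ground truth equals ignore_label are skipped.
    Classes with no labeled pixels are left out of the mean.
    """
    correct = [0] * num_classes
    total = [0] * num_classes
    for t, p in zip(truth, pred):
        if t == ignore_label:
            continue
        total[t] += 1
        if t == p:
            correct[t] += 1
    pa = {c: correct[c] / total[c] for c in range(num_classes) if total[c] > 0}
    mpa = sum(pa.values()) / len(pa) if pa else 0.0
    return pa, mpa

# Toy example: 3 classes, last pixel unlabeled
truth = [0, 0, 0, 0, 1, 1, 2, -1]
pred = [0, 0, 0, 1, 1, 1, 0, 2]
pa, mpa = pixel_accuracies(truth, pred, num_classes=3)
```

Because every class contributes equally to the mean, MPA is not dominated by the most frequent classes, which matters for an imbalanced class distribution.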

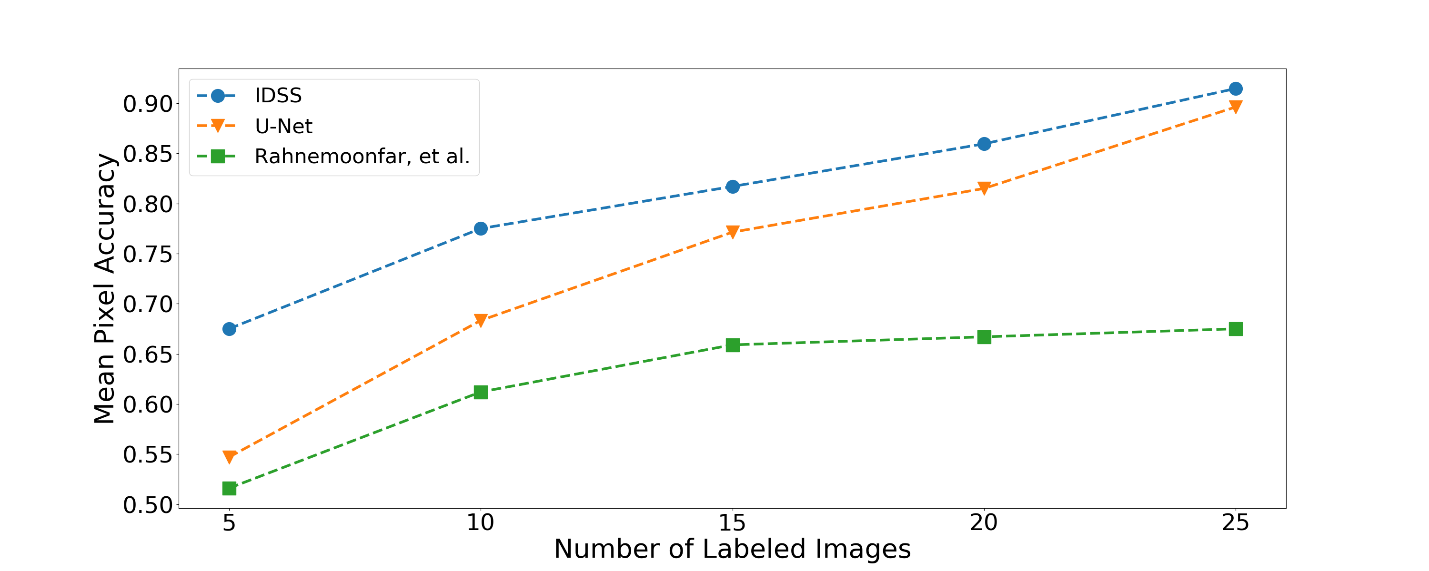

We use IDSS, the semi-supervised extension of IDUS, to fine-tune the network on labeled training sets of different sizes and explore the performance improvement from combining IDUS with supervised training. The above shows mean pixel accuracy (MPA) for different training set sizes; higher values indicate better performance. IDSS (pre-trained on IDUS) outperforms the comparison methods, especially when training data is very limited (five samples).

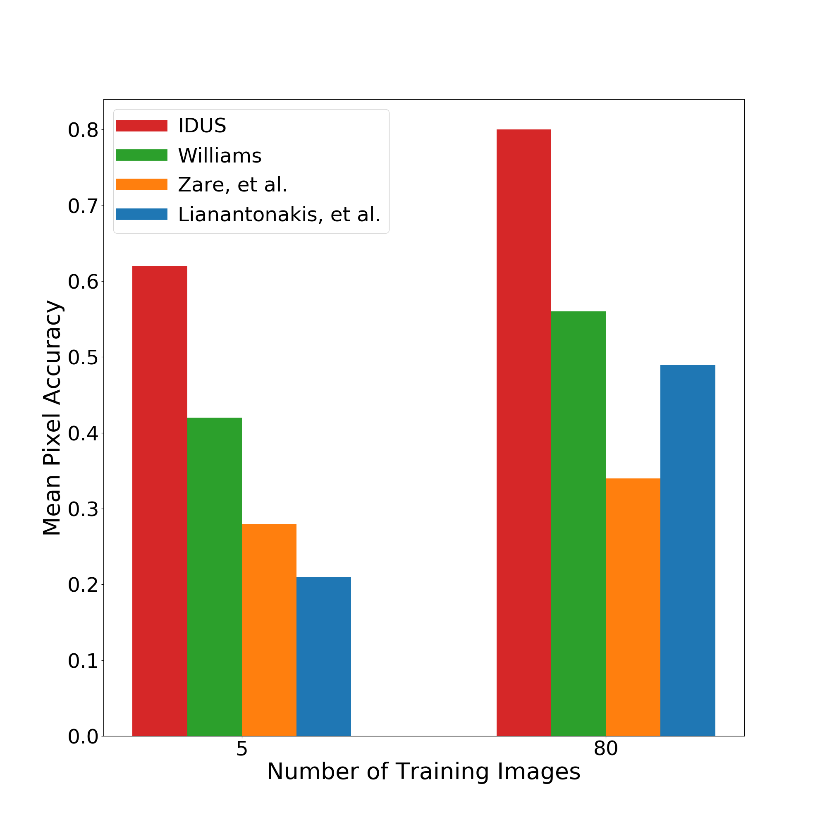

We examined mean pixel accuracy in a limited-training-data scenario in which only 6.25% of the training data is available. As shown in the above figure, the benefits of IDUS over the state of the art are even more pronounced when training data is limited.

Related Publications

Y.-C. Sun, I. D. Gerg and V. Monga, "Iterative, Deep Synthetic Aperture Sonar Image Segmentation," in IEEE Transactions on Geoscience and Remote Sensing, doi: 10.1109/TGRS.2022.3162420. [IEEE Xplore, arXiv]

Y.-C. Sun, I. D. Gerg and V. Monga, "Iterative, Deep, and Unsupervised Synthetic Aperture Sonar Image Segmentation," OCEANS 2021: San Diego – Porto, 2021, pp. 1-5, doi: 10.23919/OCEANS44145.2021.9705927. [IEEE Xplore]
