Code

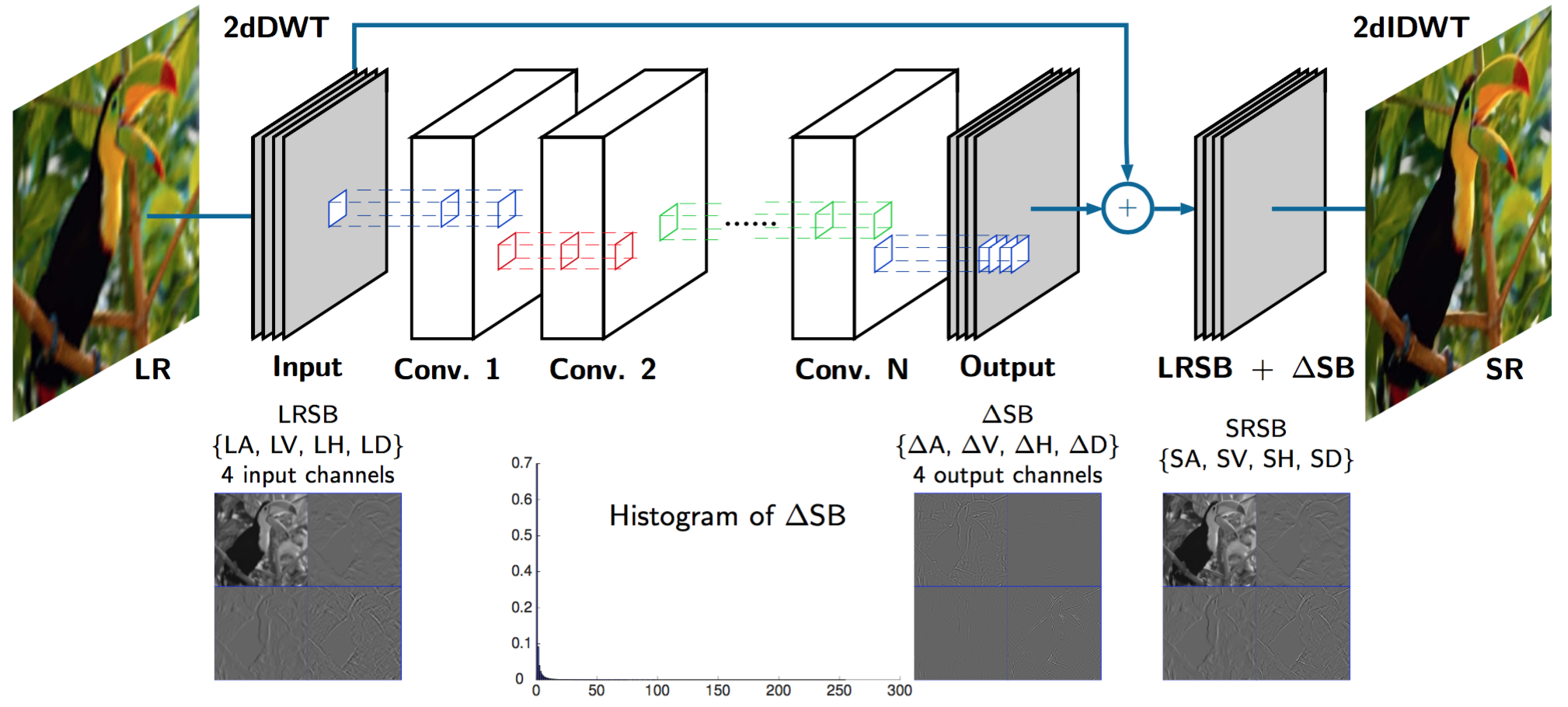

Network Structure

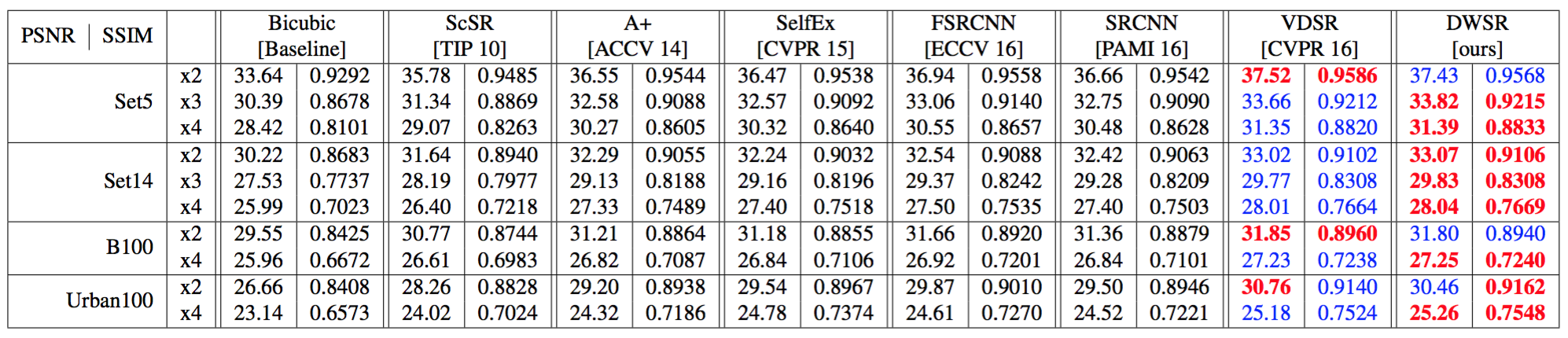

Evaluations

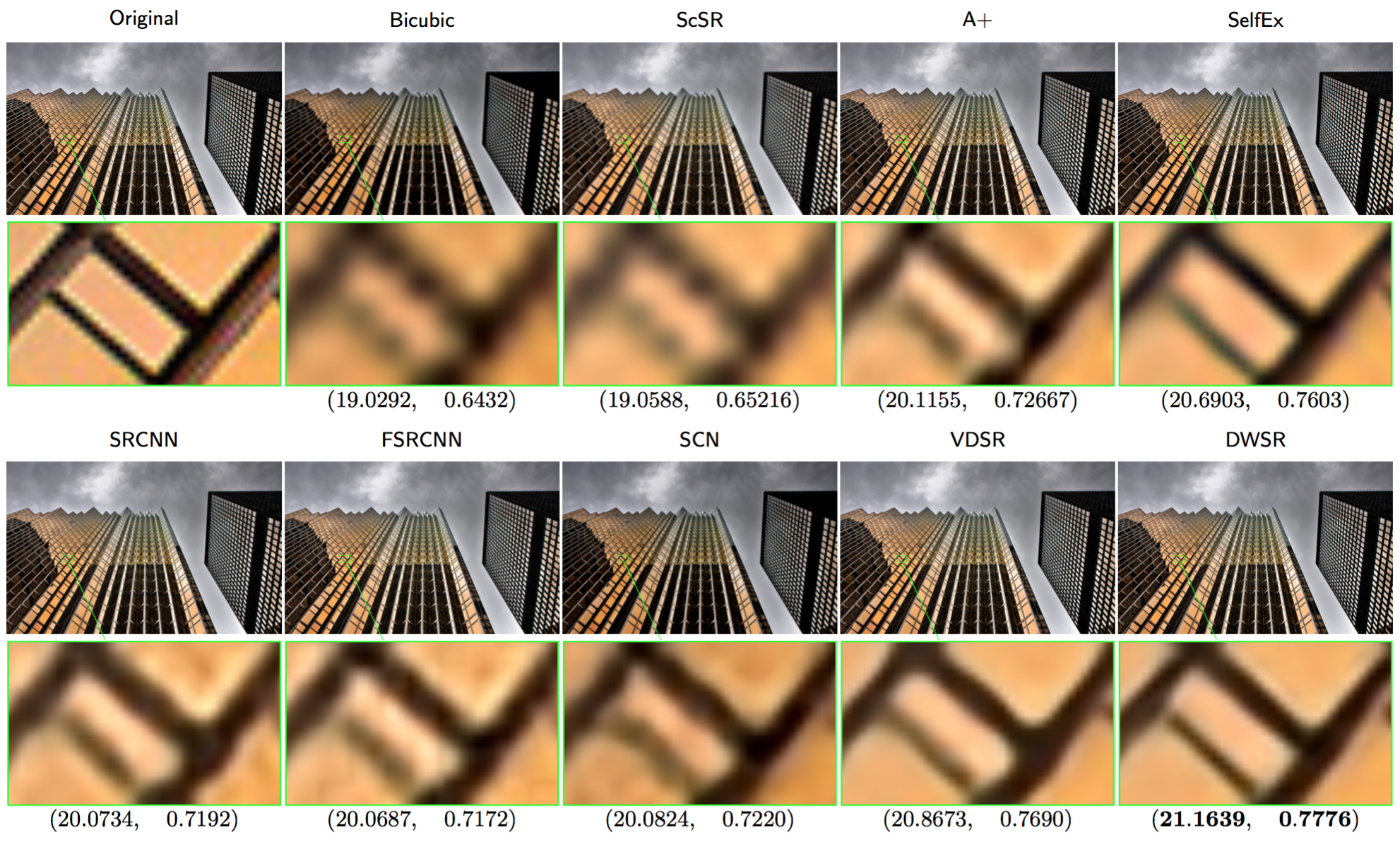

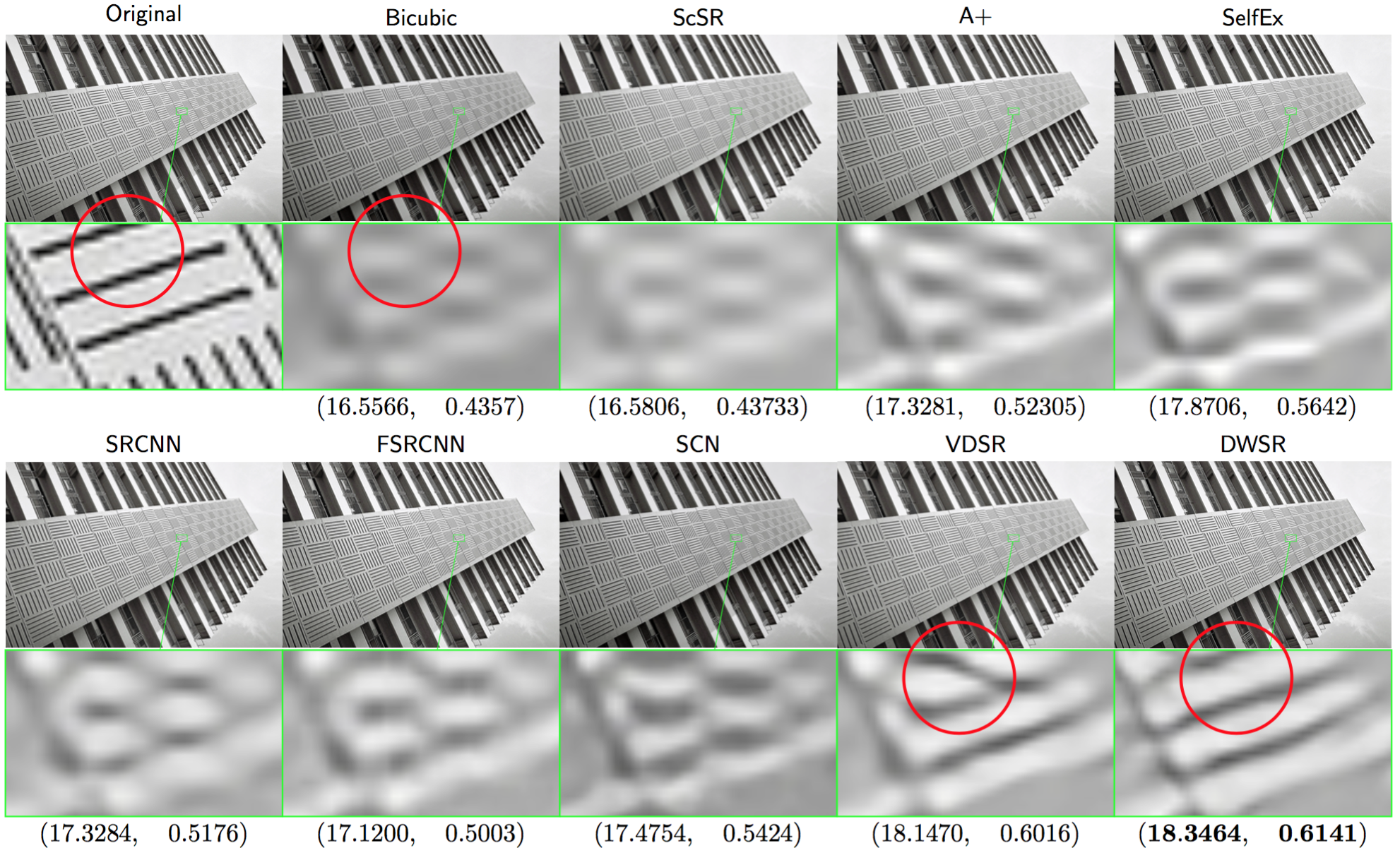

SR Results

Test images 19 and 92 from the Urban100 dataset. The assessment scores (PSNR, SSIM) are displayed under the SR result of each method. DWSR produces the best results with fewer artifacts.
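For readers unfamiliar with the first metric, the following is a minimal sketch of the standard PSNR definition, 10·log10(peak² / MSE). It is not the evaluation code used for the figures above: the page does not state which channel was evaluated or whether image borders were cropped, and the function name `psnr`, the `peak` parameter, and the sample pixel values are assumptions made for illustration.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images.

    Each image is a list of rows of pixel intensities. `peak` is the maximum
    possible intensity (255 for 8-bit images); this default is an assumption.
    """
    if len(reference) != len(estimate):
        raise ValueError("images must have the same height")
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("images must have the same width")
        for r, e in zip(ref_row, est_row):
            squared_error += (r - e) ** 2
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")
    mse = squared_error / count
    if mse == 0:
        # Identical images: the ratio is unbounded.
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 3x3 patches standing in for a ground-truth image and an SR output.
ground_truth = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
super_resolved = [[54, 55, 60], [59, 77, 61], [75, 62, 60]]
print(f"PSNR: {psnr(ground_truth, super_resolved):.2f} dB")
```

Higher PSNR means a smaller mean squared error against the ground truth. SSIM, the second value in each pair, instead compares local luminance, contrast, and structure, and is not sketched here.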

Related Publications

Co-author of R. Timofte, E. Agustsson, L. Van Gool, M.-H. Yang, L. Zhang, et al., “NTIRE 2017 challenge on single image super-resolution: Methods and results,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, July 2017. [CVF]

T. Guo, H. S. Mousavi, T. H. Vu, V. Monga, “Deep Wavelet Coefficients Prediction for Super-resolution,” IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, July 2017. [IEEE Xplore]

Selected References

J. Kim, J. K. Lee, and K. M. Lee, “Accurate image super-resolution using very deep convolutional networks,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR Oral), June 2016. [paper].

D. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014. [paper].

C. Dong, C. C. Loy, K. He, and X. Tang, “Learning a deep convolutional network for image super-resolution,” in Computer Vision–ECCV 2014, pp. 184–199, Springer, 2014. [paper].

C. Dong, C. C. Loy, and X. Tang, “Accelerating the super-resolution convolutional neural network,” in European Conference on Computer Vision, pp. 391–407, Springer, 2016. [paper].

J.-B. Huang, A. Singh, and N. Ahuja, “Single image super-resolution from transformed self-exemplars,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5197–5206, 2015. [paper].

Z. Wang, D. Liu, J. Yang, W. Han, and T. Huang, “Deep networks for image super-resolution with sparse prior,” in Proceedings of the IEEE International Conference on Computer Vision, pp. 370–378, 2015. [paper].

K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778, 2016. [paper].