Code

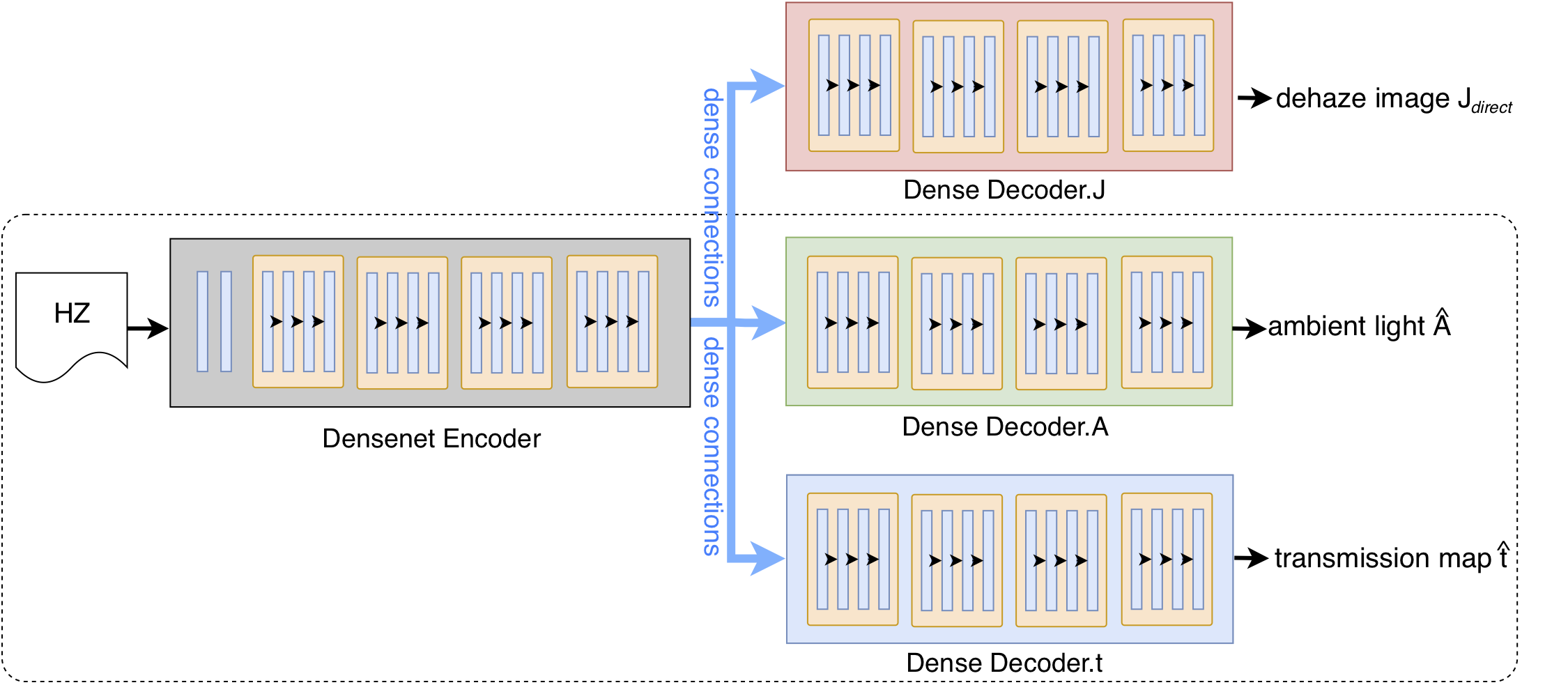

Network Structure

Dehazing Results

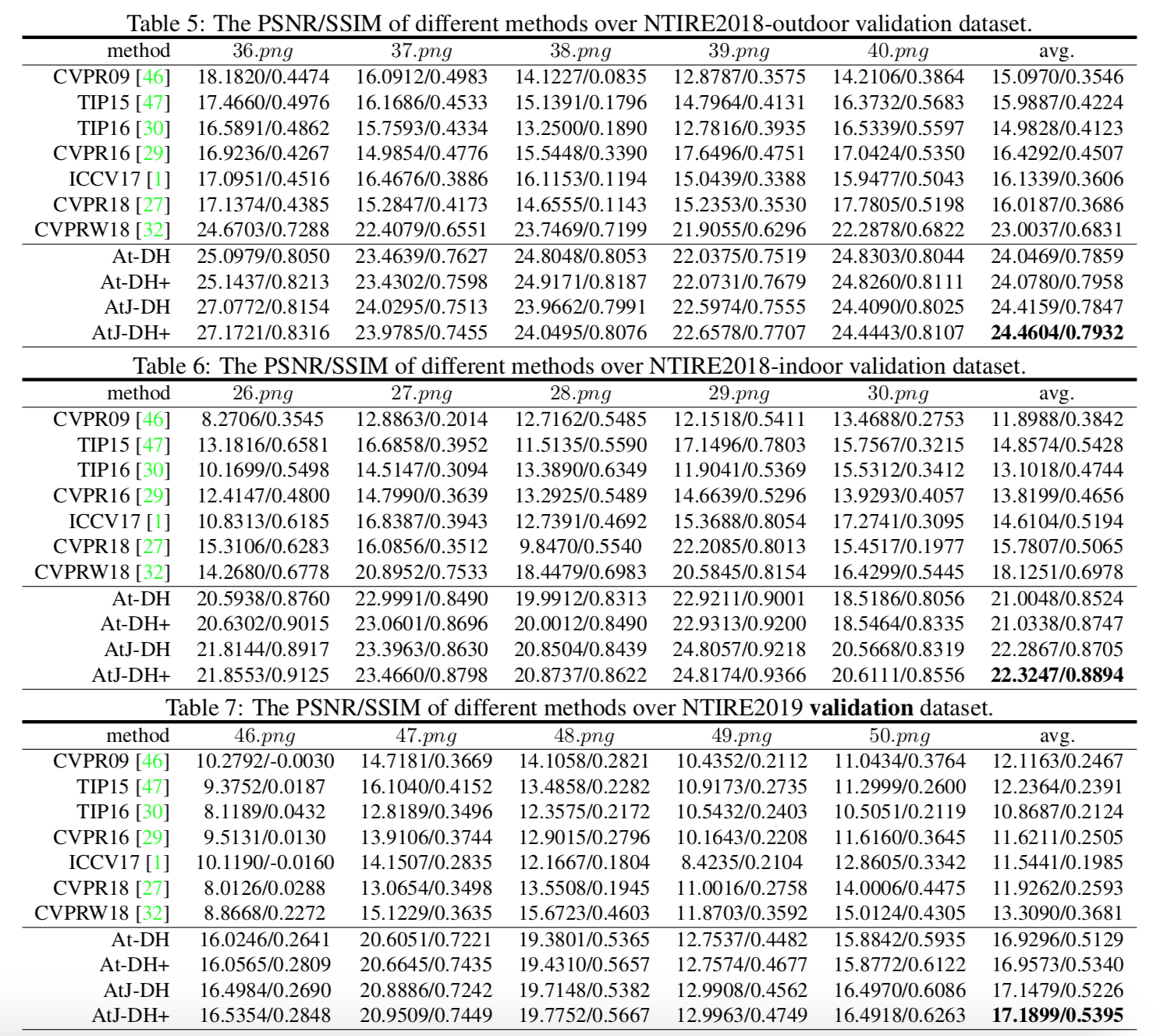

Evaluations

Related Publications

T. Guo, X. Li, V. Cherukuri, and V. Monga, “Dense Scene Information Estimation Network for Dehazing,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2019. [PDF]

Co-author of C. O. Ancuti, C. Ancuti, R. Timofte et al., “NTIRE 2019 Challenge on image dehazing: Methods and results,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2019. [CVF]

Selected References

C. O. Ancuti et al., “Dense haze: A benchmark for image dehazing with dense-haze and haze-free images,” arXiv preprint arXiv:1904.02904, 2019.

H. Zhang and V. M. Patel, “Densely connected pyramid dehazing network,” in Proc. IEEE Conf. on Comp. Vis. Patt. Recog., 2018, pp. 3194–3203.

H. Zhang, V. Sindagi, and V. M. Patel, “Multi-scale single image dehazing using perceptual pyramid deep network,” in Proc. IEEE Conf. Workshop on Comp. Vis. Patt. Recog., 2018, pp. 902–911.

C. O. Ancuti et al., “Color transfer for underwater dehazing and depth estimation,” in Proc. IEEE Int. Conf. on Image Proc., 2017, pp. 695–699.